Chain-of-thought (CoT) prompting reveals that large language models are capable of performing complex reasoning via intermediate steps. CoT methods in large language models typically use two prompting paradigms: Zero-shotCoT, approach utilizes straightforward prompts like “Let’s think step by step” to generate a sequential thought process before yielding an answer. Whereas the Few-shot-CoT approach makes use of human-crafted, step-by-step demonstrations to guide the model’s reasoning process.

This approach sometimes leads to reasoning errors to reasoning errors, highlighting the need to diversify demonstrations to mitigate its misleading effects. However, diverse demonstrations pose challenges for effective representations.

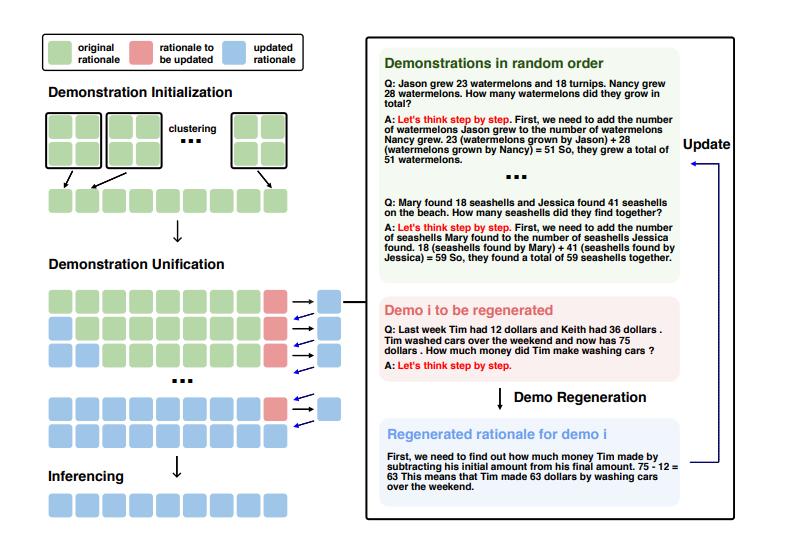

To address this researchers have proposed ECHO (Self-Harmonized Chain of Thought), a self-harmonized chain-of-thought prompting method. ECHO consists of three main steps: * question clustering: partition questions of a given dataset into a few clusters based on their similarity * demonstration sampling: select a representative question from each cluster and generate its reasoning chain using Zero-shotCoT. * demonstration unification: one demonstration is randomly selected for rationale update in each iteration, while the remaining demonstrations serve as in-context examples.

This unification process forces each rationale to learn from the remaining ones to build a coherent pattern. The process iteratively cycles through each demonstration once per iteration and continues over multiple iterations

During evaluation across three different reasoning domains ECHO demonstrates better overall performance (+2.8%) than other baselines. ECHO diverse solution paths into a uniform and effective solution pattern.

Paper : https://arxiv.org/pdf/2409.04057