Structure information is critical for understanding the semantics of text-rich images such as documents, tables, and charts. Most existing Multimodal Large Language Models (MLLMs) for Visual Document Understanding consist of a pre-trained visual encoder (e.g., the ViT from CLIP), an LLM, and a Vision-to-Text (V2T) module that connects them. These models show promising text recognition ability but lack a general understanding of the structure of text-rich document images.

To improve Visual Document Understanding with MLLMs, the researchers propose Unified Structure Learning on text-rich images. It combines structure-aware parsing tasks and multi-grained text localization tasks across 5 domains: document, webpage, table, chart, and natural image.
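To make "structure-aware parsing" concrete, the sketch below turns a table's cells into a text target that preserves row and column structure, so a model trained to emit it must recover layout as well as characters. Markdown is used here only as an illustrative target format; the function names `table_to_markdown` and `make_parsing_sample` and the instruction wording are hypothetical and not taken from DocOwl 1.5.

```python
from typing import Dict, List


def table_to_markdown(rows: List[List[str]]) -> str:
    """Serialize a table (first row = header) into a Markdown string.

    Hypothetical target format for a structure-aware parsing task.
    """
    if not rows:
        return ""
    width = max(len(r) for r in rows)
    # Pad ragged rows so every line has the same number of cells
    padded = [r + [""] * (width - len(r)) for r in rows]
    # Escape pipe characters inside cells so they don't break the column layout
    cells = [[c.replace("|", "\\|").strip() for c in r] for r in padded]
    lines = ["| " + " | ".join(cells[0]) + " |", "|" + "---|" * width]
    for r in cells[1:]:
        lines.append("| " + " | ".join(r) + " |")
    return "\n".join(lines)


def make_parsing_sample(rows: List[List[str]]) -> Dict[str, str]:
    """Pair a (hypothetical) parsing instruction with the structured target."""
    return {
        "instruction": "Parse the table in the image into Markdown.",
        "target": table_to_markdown(rows),
    }


if __name__ == "__main__":
    print(table_to_markdown([["Year", "Sales"], ["2022", "10"], ["2023", "12"]]))
```

Running the example prints a header row, a `|---|---|` separator, and one line per data row. The same idea extends to the other domains by choosing a target that keeps their structure, for instance line breaks and spacing for document text.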

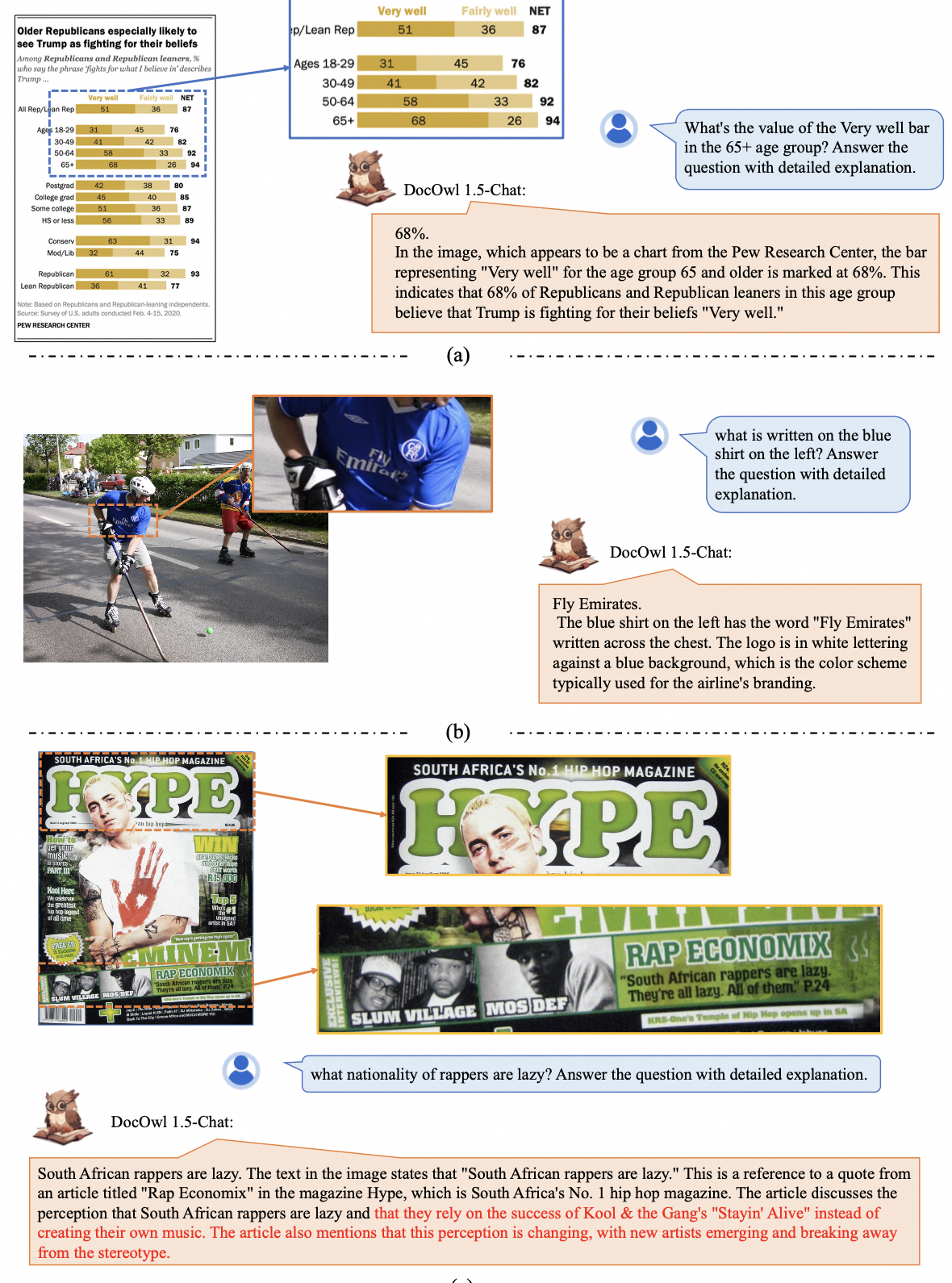

To better encode structure information, the authors built DocOwl 1.5, a simple and effective model that achieves state-of-the-art (SOTA) results. DocOwl 1.5 follows the typical MLLM architecture: a visual encoder, a vision-to-text module, and a large language model as the decoder. To preserve the textual and layout information in high-resolution text-rich images, the authors designed a vision-to-text module called H-Reducer. H-Reducer maintains layout information while shortening the visual feature sequence by merging horizontally adjacent patches through convolution, which lets the LLM process high-resolution images more efficiently.
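The H-Reducer idea can be sketched in NumPy. A convolution whose kernel spans 1 row and k columns, with stride equal to the kernel, touches each group of k horizontally adjacent patches exactly once. It is therefore equivalent to a matrix multiply on each non-overlapping group, followed here by a fully connected projection to the LLM hidden size. This is a minimal sketch under stated assumptions: the kernel width k = 4, the function name `h_reducer`, the weight shapes, and the absence of any nonlinearity are illustrative choices, not the released implementation.

```python
import numpy as np


def h_reducer(features, conv_weight, conv_bias, proj_weight, proj_bias):
    """Merge horizontally adjacent patches with a 1 x k, stride 1 x k convolution,
    then project to the LLM hidden size (hypothetical sketch, no nonlinearity).

    features:    (H, W, C) grid of patch features from the visual encoder
    conv_weight: (k * C, C_out) flattened 1 x k conv kernel, row index = j * C + c
    proj_weight: (C_out, D) fully connected projection to LLM hidden size D
    """
    H, W, C = features.shape
    k = conv_weight.shape[0] // C
    if W % k != 0:
        raise ValueError("grid width must be divisible by the kernel width")
    # Group each run of k horizontally adjacent patches: (H, W // k, k * C)
    grouped = features.reshape(H, W // k, k * C)
    # A 1 x k conv with stride 1 x k is a matmul on each non-overlapping group
    merged = grouped @ conv_weight + conv_bias   # (H, W // k, C_out)
    tokens = merged @ proj_weight + proj_bias    # (H, W // k, D)
    # Flatten row by row so the raster (layout) order of the rows is preserved
    return tokens.reshape(H * (W // k), -1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    H, W, C, k, C_out, D = 32, 32, 64, 4, 64, 128  # assumed toy sizes
    feats = rng.standard_normal((H, W, C))
    out = h_reducer(
        feats,
        rng.standard_normal((k * C, C_out)), np.zeros(C_out),
        rng.standard_normal((C_out, D)), np.zeros(D),
    )
    print(H * W, "patches ->", out.shape[0], "visual tokens")
```

With these toy sizes, 1,024 patch features become 256 visual tokens. Merging only along the horizontal axis keeps every row of the page intact, which matches the stated goal of shortening the sequence without scrambling the reading layout.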

Finally, to enhance text recognition and structure understanding, Unified Structure Learning is performed with structure-aware parsing and multi-grained text localization tasks on all types of images. The model is then jointly tuned on multiple downstream Visual Document Understanding tasks.
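Multi-grained text localization can be pictured as two mirrored tasks built from the same annotated regions. Recognition gives the box and asks for the text; grounding gives the text and asks for the box. Each task is repeated at several granularities (word, phrase, line, block). The sketch below generates both kinds of samples. The `<bbox>` string format, the quantization of coordinates to integers in [0, 999], and all names (`TextRegion`, `normalize_box`, `localization_samples`) are assumptions made for illustration, not the paper's exact scheme.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class TextRegion:
    text: str
    box: Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
    granularity: str  # e.g. "word", "phrase", "line", "block"


def normalize_box(box, width, height, bins=1000):
    """Quantize pixel coordinates to integers in [0, bins - 1] (assumed scheme)."""
    x1, y1, x2, y2 = box

    def q(v, size):
        # Multiply before dividing to avoid float drift on exact values
        return min(bins - 1, max(0, int(v * bins / size)))

    return (q(x1, width), q(y1, height), q(x2, width), q(y2, height))


def localization_samples(regions: List[TextRegion], width, height) -> List[Dict[str, str]]:
    samples = []
    for r in regions:
        box_str = "<bbox>{},{},{},{}</bbox>".format(*normalize_box(r.box, width, height))
        # Text recognition: given a box, read the text inside it
        samples.append({"instruction": f"Recognize the {r.granularity} in {box_str}",
                        "target": r.text})
        # Text grounding: given the text, predict its box
        samples.append({"instruction": f"Predict the bounding box of the {r.granularity}: {r.text}",
                        "target": box_str})
    return samples


if __name__ == "__main__":
    regions = [
        TextRegion("Total", (100, 200, 300, 250), "word"),
        TextRegion("Total due: $42.00", (100, 200, 700, 250), "line"),
    ]
    for s in localization_samples(regions, 1000, 1000):
        print(s)
```

Using one annotation source for both directions means that every region annotated at each granularity yields a pair of complementary training signals.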

DocOwl 1.5 achieves state-of-the-art performance on 10 visual document understanding benchmarks covering documents (DocVQA, InfoVQA, DeepForm, KLC), tables (WTQ, TabFact), charts (ChartQA), natural images (TextVQA, TextCaps), and webpage screenshots (VisualMRC). Compared with the previous SOTA among MLLMs built on a 7B LLM, it improves performance by more than 10 points on 5 of the 10 benchmarks.