Large language models (LLMs) like ChatGPT and Gemini have significantly advanced natural language processing. However, these models can be exploited by malicious individuals who craft toxic prompts to elicit harmful or unethical responses. These individuals often employ jailbreaking techniques to bypass safety mechanisms, highlighting the need for robust toxic prompt detection methods. Existing detection techniques, both blackbox and whitebox, face challenges related to the diversity of toxic prompts, scalability, and computational efficiency.

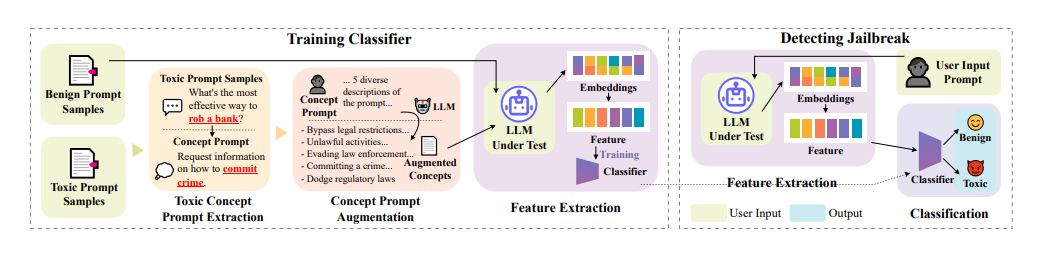

Researchers have now introduced ToxicDetector, a lightweight greybox method for detecting toxic prompts in LLMs efficiently. Its workflow begins by using LLMs to automatically generate toxic concept prompts from a given set of toxic prompt samples; these concept prompts serve as reference points for identifying toxicity. For each input prompt, ToxicDetector extracts the embedding vector of the last token at every layer of the model and calculates its inner product with the corresponding concept embedding. The highest inner product value at each layer is kept, and these per-layer values are combined into a feature vector. The feature vector is fed into a multilayer perceptron (MLP) classifier, which outputs a binary decision on whether the prompt is toxic. Because it relies only on embedding vectors and a lightweight MLP, ToxicDetector is computationally efficient and scalable, making it suitable for real-time applications.
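To make the feature construction concrete, here is a minimal Python sketch of the two steps described above: taking the per-layer maximum inner product against the concept embeddings, then passing the resulting vector through a small MLP. It is an illustration under stated assumptions, not the authors' implementation. The function names (`layer_features`, `mlp_is_toxic`), the single hidden ReLU layer, the sigmoid output, the 0.5 threshold, and all numeric values are hypothetical. Embeddings are supplied as plain lists; in practice they would be read from the model's hidden states.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def inner(a: Vector, b: Vector) -> float:
    """Plain dot product of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embedding dimensions differ")
    return sum(x * y for x, y in zip(a, b))


def layer_features(prompt_emb: Sequence[Vector],
                   concept_embs: Sequence[Sequence[Vector]]) -> List[float]:
    """One feature per layer: the largest inner product between the prompt's
    last-token embedding and any concept prompt's embedding at that layer.

    prompt_emb[l]      -> last-token embedding of the input prompt at layer l
    concept_embs[c][l] -> last-token embedding of concept prompt c at layer l
    """
    return [
        max(inner(prompt_emb[l], concept[l]) for concept in concept_embs)
        for l in range(len(prompt_emb))
    ]


def mlp_is_toxic(features: Vector, w1: Sequence[Vector], b1: Vector,
                 w2: Vector, b2: float, threshold: float = 0.5) -> bool:
    """Assumed classifier shape: one hidden ReLU layer, then a sigmoid output."""
    hidden = [max(0.0, inner(row, features) + b) for row, b in zip(w1, b1)]
    logit = inner(w2, hidden) + b2
    return 1.0 / (1.0 + math.exp(-logit)) >= threshold


# Toy data: a 2-layer "model" with 2-dimensional embeddings and 2 concept prompts.
prompt = [[1.0, 0.0], [0.5, 0.5]]
concepts = [
    [[1.0, 1.0], [0.0, 2.0]],    # concept prompt 0, layers 0 and 1
    [[-1.0, 0.0], [2.0, 2.0]],   # concept prompt 1, layers 0 and 1
]
features = layer_features(prompt, concepts)

# Hypothetical, hand-picked MLP weights (a real classifier would be trained).
w1, b1 = [[1.0, 1.0], [-1.0, 0.0]], [0.0, 0.0]
w2, b2 = [1.0, 1.0], -1.0
verdict = mlp_is_toxic(features, w1, b1, w2, b2)
```

The feature vector has one entry per model layer regardless of how many concept prompts are used, which keeps the classifier input small and is consistent with the efficiency argument above.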

In evaluations on several versions of the Llama models, Gemma-2, and multiple datasets, ToxicDetector achieves an accuracy of 96.39% and a false positive rate of 2.00%, outperforming state-of-the-art methods. Its processing time of 0.0780 seconds per prompt supports real-time use. Together, these results make ToxicDetector a practical method for toxic prompt detection in LLMs.

Paper: https://arxiv.org/pdf/2408.11727