A paradigm shift is emerging in how AI systems are built. The new generation of AI applications increasingly consists of compound systems with multiple sophisticated components, where each component could be an LLM-based agent, a tool such as a simulator, or web search. As a result, developing principled and automated optimization methods for such compound AI systems is one of the most important new challenges.

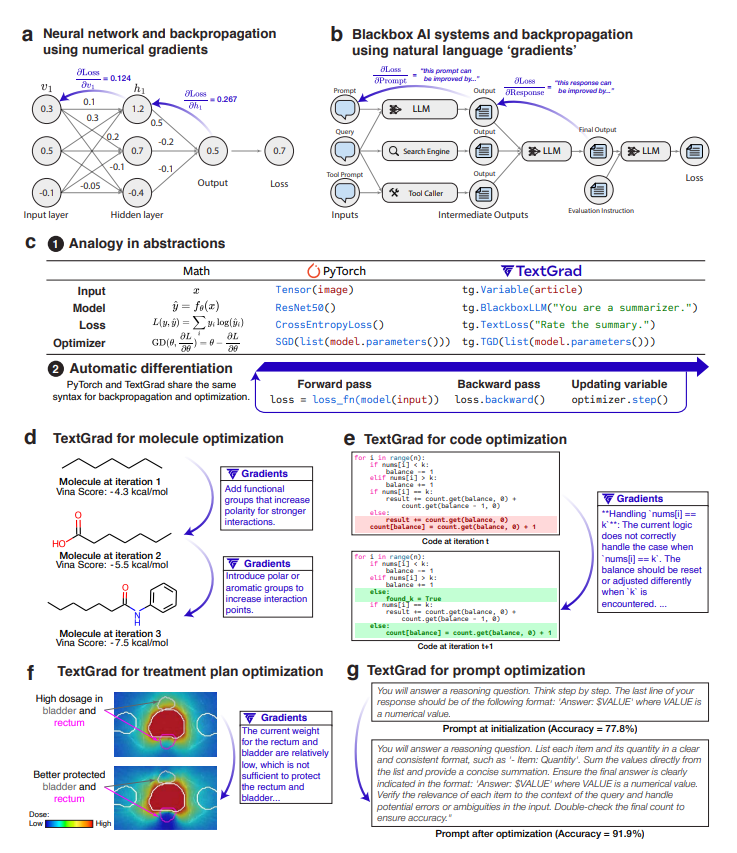

To optimize this new generation of AI systems, researchers from Stanford have introduced TEXTGRAD, a framework that performs automatic “differentiation” via text. Here, differentiation and gradients are used as a metaphor for textual feedback from LLMs. In this framework, each AI system is transformed into a computation graph, where variables are the inputs and outputs of complex (not necessarily differentiable) function calls. The feedback to the variables (dubbed ‘textual gradients’) is provided as informative and interpretable natural language criticism that describes how a variable should be changed to improve the system. The gradients are propagated through arbitrary functions, such as LLM API calls, simulators, or external numerical solvers.
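
To make the computation-graph idea concrete, here is a minimal toy sketch in plain Python. It is not the TEXTGRAD library or its API: the `Variable` class, the `call` and `backward` helpers, and the `stub_critic` function (a deterministic stand-in for the LLM that would write the feedback) are all hypothetical names chosen for illustration. The sketch only shows how criticism of an output could be passed back to the variables that produced it.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Variable:
    """A node in the computation graph: a piece of text plus feedback on it."""
    value: str
    role: str
    parents: List["Variable"] = field(default_factory=list)
    gradients: List[str] = field(default_factory=list)  # textual feedback


def call(fn: Callable[[str], str], inp: Variable, role: str) -> Variable:
    """Apply a black-box function and record the input as the output's parent."""
    return Variable(value=fn(inp.value), role=role, parents=[inp])


def stub_critic(child: Variable, parent: Variable) -> str:
    """Hypothetical stand-in for an LLM call that turns feedback on `child`
    into feedback on `parent`."""
    downstream = "; ".join(child.gradients)
    return f"To address [{downstream}] in the {child.role}, revise the {parent.role}."


def backward(output: Variable, feedback: str) -> None:
    """Propagate textual feedback from the output back to its ancestors.

    This simple traversal is enough for a chain of calls; a general graph
    would need to visit nodes in reverse topological order.
    """
    output.gradients.append(feedback)
    stack = [output]
    while stack:
        node = stack.pop()
        for parent in node.parents:
            parent.gradients.append(stub_critic(node, parent))
            stack.append(parent)


# A one-step "system": a prompt goes through a black-box function (here a
# lambda standing in for an LLM API call) and produces an answer.
prompt = Variable("Answer briefly.", role="system prompt")
answer = call(lambda p: f"(model output given: {p})", prompt, role="answer")

backward(answer, "The answer lacks a worked derivation.")
print(prompt.gradients[0])
```

In a real system the critic would be an LLM prompted with the variable's value, its role, and the downstream feedback, so the "gradient" would be free-form criticism rather than a fixed template.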

In general, TEXTGRAD backpropagates textual feedback provided by LLMs to improve individual components of a compound AI system. In the TEXTGRAD framework, LLMs provide rich, general, natural language suggestions to optimize variables in computation graphs, ranging from code snippets to molecular structures. TEXTGRAD follows PyTorch’s syntax and abstractions, and is flexible and easy to use. It works out of the box for a variety of tasks: users provide only the objective function, without tuning the framework’s components or prompts.
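
The PyTorch-like workflow described above (clear old feedback, compute feedback, update the variable) can be sketched with a toy loop. Again, this is an illustration under stated assumptions rather than TEXTGRAD's actual interface: `Variable`, `TextOptimizer`, `stub_evaluate`, and `stub_editor` are hypothetical, and the two stub functions are rule-based placeholders for what would be LLM calls.

```python
from typing import Callable, List


class Variable:
    """Text to be optimized, with a list of accumulated textual feedback."""

    def __init__(self, value: str, requires_grad: bool = True) -> None:
        self.value = value
        self.requires_grad = requires_grad
        self.grad: List[str] = []


class TextOptimizer:
    """Toy optimizer: rewrites each variable using its accumulated feedback."""

    def __init__(self, parameters: List[Variable],
                 editor: Callable[[str, List[str]], str]) -> None:
        self.parameters = parameters
        self.editor = editor

    def zero_grad(self) -> None:
        for p in self.parameters:
            p.grad.clear()

    def step(self) -> None:
        for p in self.parameters:
            if p.requires_grad and p.grad:
                p.value = self.editor(p.value, p.grad)


def stub_evaluate(text: str) -> List[str]:
    """Hypothetical stand-in for an LLM evaluator (the objective function)."""
    issues = []
    if "step by step" not in text:
        issues.append("Ask for step-by-step reasoning.")
    if "units" not in text:
        issues.append("Ask the model to state units.")
    return issues


def stub_editor(text: str, feedback: List[str]) -> str:
    """Hypothetical stand-in for an LLM that applies one criticism per step."""
    fixes = {
        "Ask for step-by-step reasoning.": " Think step by step.",
        "Ask the model to state units.": " Always state units.",
    }
    return text + fixes[feedback[0]]


prompt = Variable("You are a physics tutor.")
optimizer = TextOptimizer([prompt], editor=stub_editor)

for _ in range(5):
    optimizer.zero_grad()
    # "Backward" pass for a single-node graph: collect criticism of the prompt.
    prompt.grad.extend(stub_evaluate(prompt.value))
    if not prompt.grad:
        break  # the evaluator has no remaining criticism
    optimizer.step()

print(prompt.value)
```

The loop mirrors the familiar `zero_grad` / backward / `step` rhythm, with text edits taking the place of numeric parameter updates. The user-supplied piece is the evaluator; everything else is generic.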

TEXTGRAD’s effectiveness and generality were evaluated across a diverse range of applications, from question answering and molecule optimization to radiotherapy treatment planning. Without any modification to the framework, TEXTGRAD improves the zero-shot accuracy of GPT-4o on Google-Proof Question Answering from 51% to 55%, yields a 20% relative performance gain in optimizing solutions to LeetCode-Hard coding problems, improves prompts for reasoning, designs new druglike small molecules with desirable in silico binding, and designs radiation oncology treatment plans with high specificity. TEXTGRAD lays a foundation for accelerating the development of the next generation of AI systems.

Paper: https://arxiv.org/pdf/2406.07496