Recent advances in Large Language Models (LLMs) have focused predominantly on a small set of data-rich languages, with training datasets notably skewed towards English. Most existing multilingual LLMs suffer from significant trade-offs between performance, efficiency, and scalability, particularly when extending support to a broader spectrum of languages. Models such as BLOOM and Llama2 typically underperform in languages that are less represented in the training data, because of the difficulty of balancing language-specific nuances, whereas language-specific LLMs such as HyperClova for Korean or OpenHaathi for Hindi are challenging to scale because of their exponential data and training requirements.

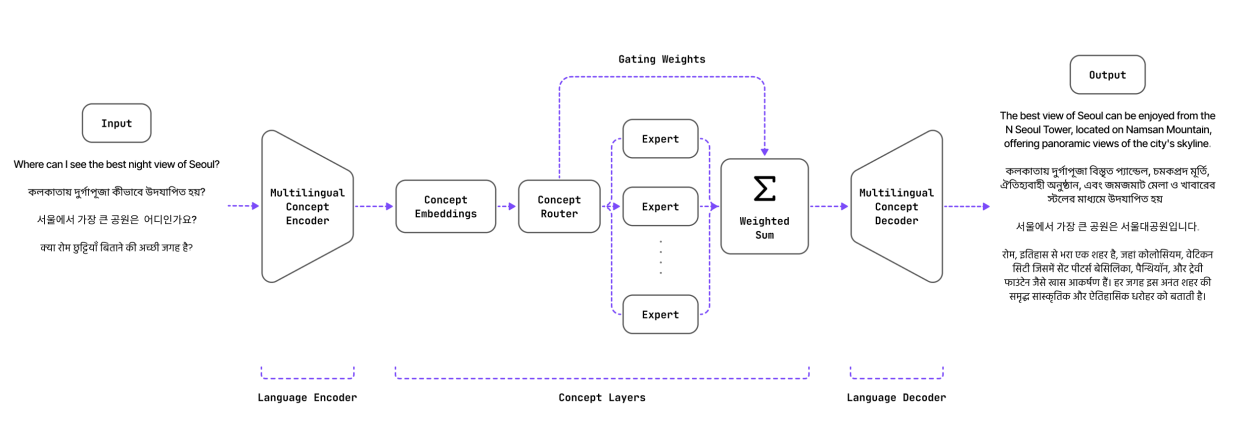

To overcome this challenge, researchers have introduced SUTRA (Sanskrit for “thread”), a novel multilingual LLM architecture that is trained by decoupling concept learning from language learning. The input is processed by a multilingual concept encoder, then by the concept model, and finally by a multilingual concept decoder that generates the output response. This architecture lets the core model focus on universal, language-agnostic concepts while leveraging specialized neural machine translation (NMT) mechanisms for language-specific processing, thus preserving linguistic nuances without compromising the model’s scalability or performance.
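The encoder, concept model, and decoder stages can be pictured with a deliberately tiny sketch. Everything here is hypothetical and for illustration only: the lookup tables stand in for the learned multilingual encoder and decoder, the concept names are invented, and `concept_model` is a placeholder that simply answers each courtesy in kind. It is not SUTRA's implementation; it only shows that the core stage never sees which language is in use.

```python
# Toy lookup tables standing in for the learned multilingual encoder/decoder.
# The vocabularies and concept names are invented for this illustration.
WORD_TO_CONCEPT = {
    "en": {"hello": "GREETING", "goodbye": "FAREWELL"},
    "es": {"hola": "GREETING", "adios": "FAREWELL"},
}
CONCEPT_TO_WORD = {
    lang: {concept: word for word, concept in table.items()}
    for lang, table in WORD_TO_CONCEPT.items()
}


def concept_encoder(text, lang):
    """Map language-specific words to language-agnostic concept IDs."""
    return [WORD_TO_CONCEPT[lang][word] for word in text.lower().split()]


def concept_model(concepts):
    """Language-agnostic core: it receives concept IDs only, never a language tag.

    Placeholder behaviour: reply to each courtesy with the same courtesy.
    """
    return list(concepts)


def concept_decoder(concepts, lang):
    """Render concept IDs back into words of the requested language."""
    return " ".join(CONCEPT_TO_WORD[lang][concept] for concept in concepts)


def respond(text, in_lang, out_lang):
    """Run the three stages in order: encoder, concept model, decoder."""
    concepts = concept_encoder(text, in_lang)
    reply = concept_model(concepts)
    return concept_decoder(reply, out_lang)


print(respond("hello", "en", "es"))  # hola
print(respond("adios", "es", "en"))  # goodbye
```

In this toy, supporting another language only means adding one more vocabulary table; `concept_model` is untouched, which is the intuition behind decoupling concept learning from language learning.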

Further, SUTRA employs a Mixture of Experts (MoE) strategy, which improves the model’s efficiency by engaging only the relevant experts for the linguistic task at hand. In each MoE layer, a specialized router sends every input vector to a subset of the available experts, specifically 2 out of 8. The layer’s output is the weighted sum of the selected experts’ individual outputs. Each expert is a feedforward module similar to those found in conventional transformer models.
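The routing described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not SUTRA's code: the router is assumed to be a single linear layer, the gate weights are assumed to be a softmax over the two selected router scores (a common choice in sparse MoE layers; the text above only says the outputs are weighted), and the dimensions, random weights, and ReLU feedforward experts are arbitrary.

```python
import math
import random

NUM_EXPERTS = 8  # experts per MoE layer, as stated in the text
TOP_K = 2        # experts engaged per input vector, as stated in the text
DIM = 4          # toy model width (assumption)


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def make_matrix(rows, cols, rng):
    """Random weights in [-1, 1]; stands in for trained parameters."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def make_expert(rng, hidden=8):
    """Build a two-layer feedforward expert with a ReLU in between."""
    w_in = make_matrix(hidden, DIM, rng)
    w_out = make_matrix(DIM, hidden, rng)

    def expert(x):
        h = [max(0.0, v) for v in matvec(w_in, x)]
        return matvec(w_out, h)

    return expert


def moe_layer(x, router_weights, experts, top_k=TOP_K):
    """Route x to the top_k highest-scoring experts and mix their outputs."""
    logits = matvec(router_weights, x)  # one router score per expert
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in chosen])  # assumed gating scheme
    output = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        expert_out = experts[idx](x)  # only the selected experts are evaluated
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen, gates


rng = random.Random(0)
router_weights = make_matrix(NUM_EXPERTS, DIM, rng)
experts = [make_expert(rng) for _ in range(NUM_EXPERTS)]

token = [0.5, -1.0, 0.25, 2.0]
output, chosen, gates = moe_layer(token, router_weights, experts)
print("experts used:", chosen)
print("gate weights:", gates)
```

Because only 2 of the 8 experts run for each input vector, the per-token compute stays close to that of a single feedforward block even though the layer holds eight experts' worth of parameters.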

In conclusion, the combination of multilingual skills, online connectivity, and efficient language generation in SUTRA models promises to redefine the landscape of multilingual language modeling.

Paper: https://arxiv.org/pdf/2405.06694