Extracting structured information from unstructured text lies at the core of many Gen AI problems, such as Information Retrieval, Knowledge Graph Construction, Knowledge Discovery, and Automatic Text Summarization. In most of these applications, Entity Linking (EL) and Relation Extraction (RE) are critical components, and they are judged on three fundamental properties: Inference Speed, Flexibility, and Performance. Although tremendous progress has recently been made on both EL and RE, existing approaches address at most two of these three properties at once, which limits their applicability across scenarios.

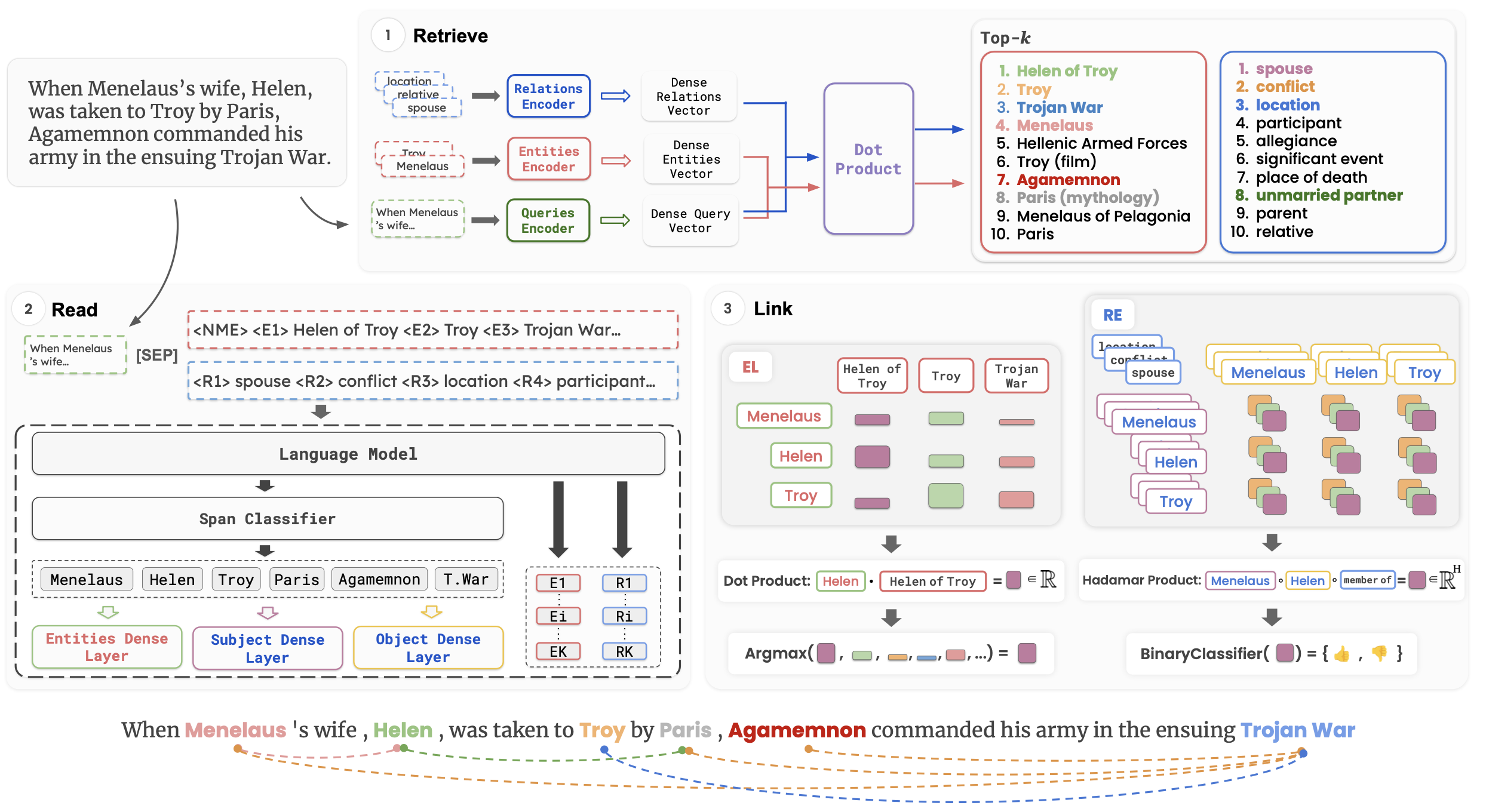

To address this, researchers have introduced ReLiK, a Retriever-Reader architecture for both EL and RE. It is divided into two main components:

* The Retriever module, which retrieves the candidate Entities/Relations that could be extracted from a given input text.
* The Reader module, which takes the original input text together with all the retrieved Entities/Relations (the output of the Retriever) and connects them to the relevant spans in the text.
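The two-stage flow can be illustrated with a toy sketch. This is not ReLiK's implementation: the entity index, the bag-of-words similarity, and the exact-match reader below are all hypothetical stand-ins, chosen only to show how a retriever proposes candidates and a reader ties them to spans.

```python
import math
import re
from collections import Counter

# Hypothetical toy index (not from ReLiK): entity name -> textual description.
ENTITY_INDEX = {
    "Rome": "Rome is the capital city of Italy",
    "Italy": "Italy is a country in southern Europe",
    "Paris": "Paris is the capital city of France",
    "Python": "Python is a programming language",
}

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def embed(text):
    """Stand-in for a dense encoder: a bag-of-words count vector."""
    return Counter(tokenize(text))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(text, index, top_k=3):
    """Retriever: rank index entries by similarity to the input text."""
    query = embed(text)
    scored = [(cosine(query, embed(f"{name} {desc}")), name)
              for name, desc in index.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for score, name in scored[:top_k] if score > 0]

def read(text, candidates):
    """Reader stand-in: link each candidate to the spans where it is mentioned."""
    spans = []
    for name in candidates:
        for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            spans.append((match.start(), match.end(), name))
    return sorted(spans)

text = "Rome is the capital of Italy."
candidates = retrieve(text, ENTITY_INDEX)
print(candidates)
print(read(text, candidates))
```

In this sketch the retriever may return candidates that never appear in the text; the reader then keeps only those it can attach to a span, which mirrors the division of labor described above.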

ReLiK improves the Inference Speed, Flexibility, and Performance of AI systems by integrating several innovative features:

1. It uses a nonparametric memory with a Retriever component, reducing the number of parameters needed while maintaining high performance and fast inference speed.
2. It employs textual representations for entities and relations, enabling the model to handle unseen entities and relations more flexibly.
3. Its novel input formulation maximizes the contextualization capabilities of advanced language models like DeBERTa-v3, leading to improved performance and processing speed by encoding the text and the candidate entities together and extracting from them in the same step.
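The idea of encoding the text and its candidates together can be sketched as a single concatenated sequence. The marker strings `[SEP]` and `<ST0>`, `<ST1>`, ... and the function name `build_reader_input` are illustrative assumptions, not necessarily the tokens or code ReLiK uses.

```python
def build_reader_input(text, candidates, sep="[SEP]"):
    """Concatenate the input text with every retrieved candidate.

    Each candidate is preceded by its own marker (hypothetical names), so a
    single encoder pass can contextualize the text and all candidates at once.
    """
    parts = [text, sep]
    for i, candidate in enumerate(candidates):
        parts.append(f"<ST{i}>")  # hypothetical per-candidate marker token
        parts.append(candidate)
    return " ".join(parts)

print(build_reader_input("Rome is the capital of Italy.", ["Rome", "Italy"]))
```

Because all candidates share one sequence with the text, the encoder runs once per input rather than once per candidate, which is the source of the speed benefit the list above attributes to this formulation.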

Paper: https://arxiv.org/pdf/2408.00103

Code: https://github.com/SapienzaNLP/relik?tab=readme-ov-file