The instruction-following ability of large language models enables humans to interact with AI agents in a natural way. However, when required to generate responses of a specific length, large language models often struggle to meet users’ needs due to their inherent difficulty in accurately perceiving numerical constraints.

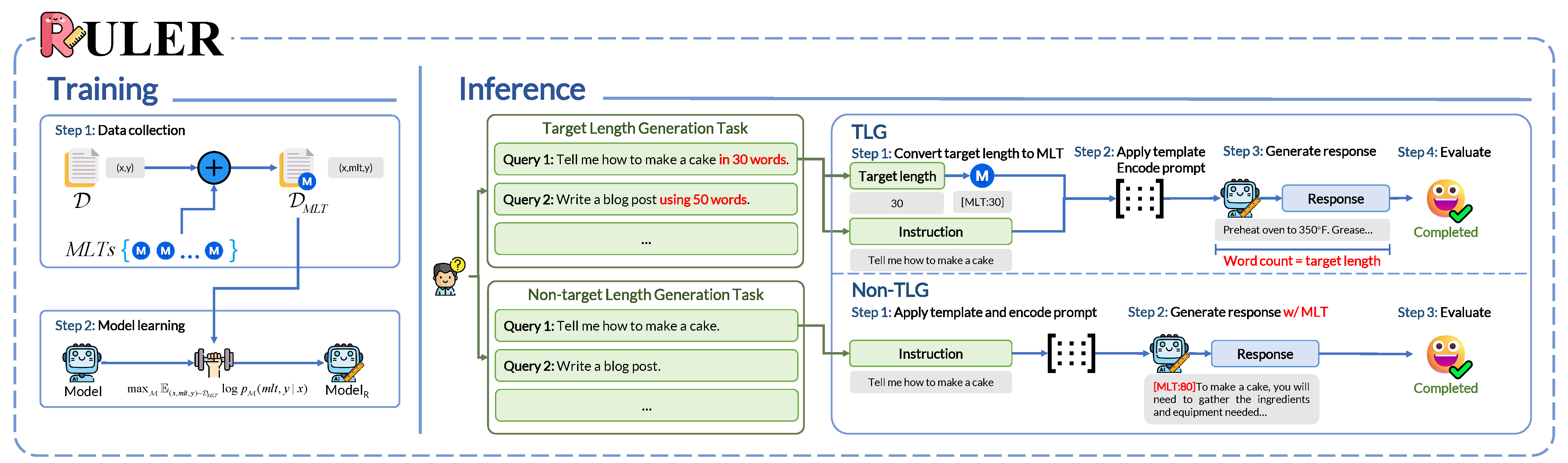

To explore the ability of large language models (LLMs) to control the length of generated responses, we propose the Target Length Generation Task (TLG) and design two metrics, Precise Match (PM) and Flexible Match (FM), to evaluate how well a model adheres to a specified response length. Furthermore, we introduce RULER, a model-agnostic method that improves the ability of LLMs to follow length-constrained instructions. RULER uses Meta Length Tokens (MLTs) to control response length during both training and inference. For training, we construct a dataset, DMLT, on which models learn to generate an MLT followed by a response of the corresponding length. During inference, RULER derives the MLT from the target length stated in the instruction; if no target length is given, the model generates an MLT itself.
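As a rough illustration of how two length-adherence metrics of this kind could be computed, the sketch below treats PM as a tight tolerance band around the target length and FM as a wider one. The band widths, the whitespace-based word count, and all names (`LengthTarget`, `precise_match`, `flexible_match`, `score`) are assumptions made for this example; the text above does not specify the exact definitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LengthTarget:
    """A target length with two hypothetical tolerance bands (in words)."""
    target: int
    precise_tol: int   # assumed half-width of the PM band
    flexible_tol: int  # assumed half-width of the FM band


def word_count(text: str) -> int:
    # Whitespace splitting is an assumption; the source does not say
    # how response length is measured.
    return len(text.split())


def precise_match(response: str, t: LengthTarget) -> bool:
    # True when the response length falls inside the tight band.
    return abs(word_count(response) - t.target) <= t.precise_tol


def flexible_match(response: str, t: LengthTarget) -> bool:
    # True when the response length falls inside the wider band.
    return abs(word_count(response) - t.target) <= t.flexible_tol


def score(responses, targets):
    """Return (PM, FM) as percentages over paired responses and targets."""
    pairs = list(zip(responses, targets))
    if not pairs:
        return 0.0, 0.0
    pm = sum(precise_match(r, t) for r, t in pairs)
    fm = sum(flexible_match(r, t) for r, t in pairs)
    return 100.0 * pm / len(pairs), 100.0 * fm / len(pairs)
```

Under these assumptions, every response that counts toward PM also counts toward FM, so FM is never lower than PM on the same set of responses.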

Specifically, RULER equips LLMs with the ability to generate responses of a specified length based on the length constraints in the instruction. Moreover, RULER can automatically generate an appropriate MLT when no length constraint is explicitly provided, demonstrating strong versatility and generalization.
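The two inference cases described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `[MLT:n]` token format, the bucket values in `MLT_BUCKETS`, and the helper names `nearest_mlt` and `build_generation_prefix` are all hypothetical.

```python
from typing import Optional

# Hypothetical MLT vocabulary: the token format and the length buckets
# are illustrative assumptions, not taken from the source.
MLT_BUCKETS = [10, 30, 50, 80, 150, 300, 500, 700]


def nearest_mlt(target_len: int) -> str:
    """Map a requested length to the closest assumed MLT bucket."""
    bucket = min(MLT_BUCKETS, key=lambda b: abs(b - target_len))
    return f"[MLT:{bucket}]"


def build_generation_prefix(instruction: str,
                            target_len: Optional[int] = None) -> str:
    """Return the text that the model would continue from."""
    if target_len is None:
        # No length constraint: leave the MLT for the model to generate.
        return f"{instruction}\n"
    # Length constraint present: fix the MLT before the response begins.
    return f"{instruction}\n{nearest_mlt(target_len)} "


print(repr(build_generation_prefix("Summarize the article.", 45)))
print(repr(build_generation_prefix("Summarize the article.")))
```

In the first call the prefix ends with a fixed MLT, so the response that follows is conditioned on the requested length; in the second call the model is free to emit whichever MLT it judges appropriate before writing the response.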

Comprehensive experiments demonstrate the effectiveness of RULER across different LLMs on the Target Length Generation Task: at the All Level, RULER achieves an average gain of 27.97 on PM and 29.57 on FM. In addition, we conduct extensive ablation experiments to further substantiate the efficacy and generalization of RULER.