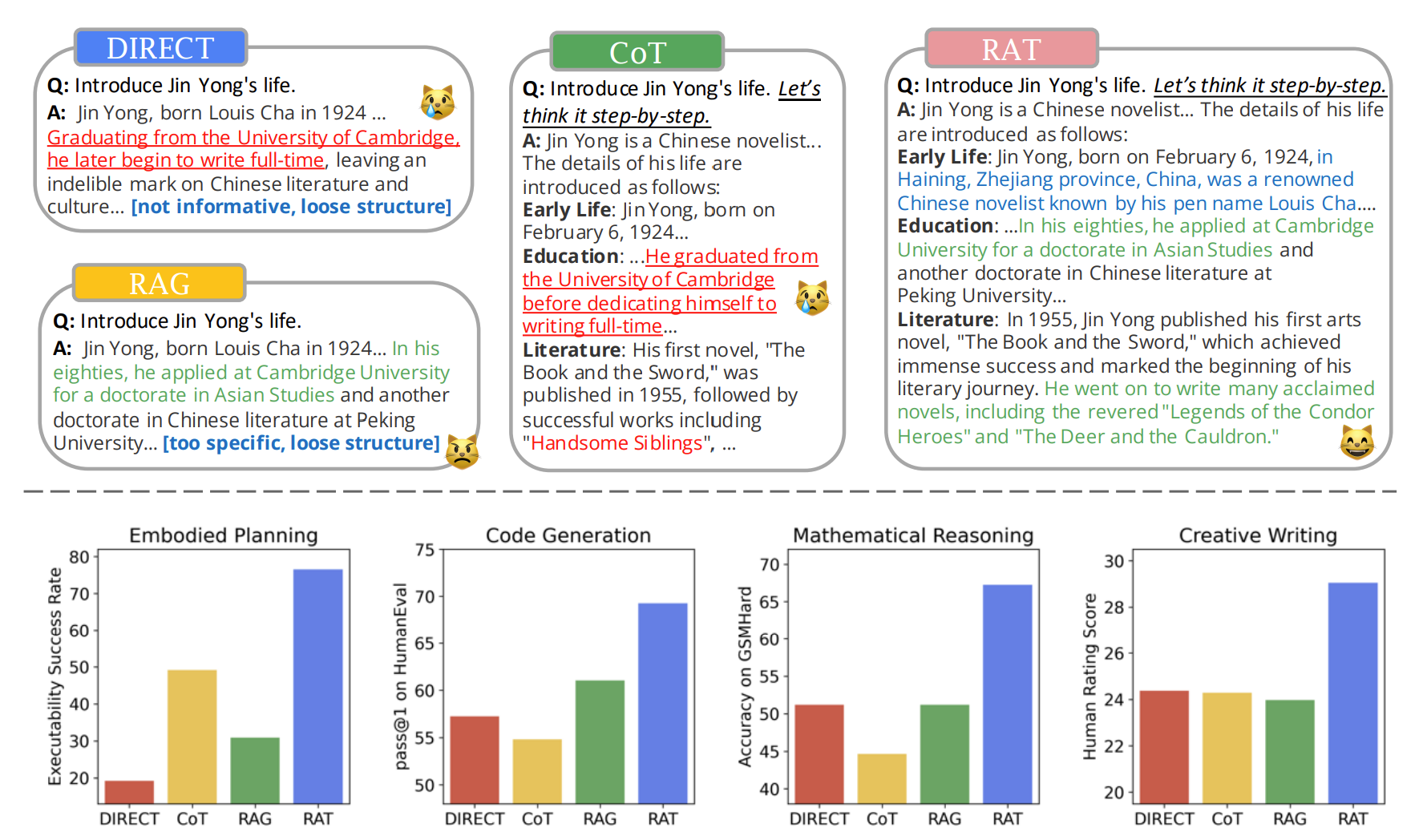

Factual correctness has been a growing concern around LLMs' reasoning capabilities. The issue becomes more significant with zero-shot CoT (Chain of Thought) prompting, a.k.a. “let’s think step-by-step”, and with long-horizon generation tasks that require multi-step, context-aware reasoning, such as code generation, task planning, and mathematical reasoning.

Several prompting techniques have been proposed to mitigate this issue. One promising direction is Retrieval Augmented Generation (RAG), which uses retrieved information to support more factually grounded reasoning.

So can we synergize RAG with prompting techniques such as CoT for sophisticated long-horizon reasoning?

Well, that is what researchers have done with the retrieval-augmented thoughts (RAT) prompting strategy. RAT comprises two key steps: (1) First, an initial zero-shot CoT produced by the LLM, together with the original task prompt, is used as a query to retrieve information that could help revise the possibly flawed CoT. (2) Then, instead of retrieving and revising with the full CoT and producing the final response at once, the LLM produces the response step by step following the CoT (a series of subtasks), and only the current thought step is revised, based on information retrieved with the task prompt and the current and past CoT steps.
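The two steps above can be sketched as a short loop. This is only an illustrative sketch, not the authors' implementation: the function `rat` and its three callables (`draft_cot`, `retrieve`, `revise`) are hypothetical names, and the toy stand-ins replace what would in practice be LLM calls and a real retriever.

```python
from typing import Callable, List


def rat(
    task_prompt: str,
    draft_cot: Callable[[str], List[str]],
    retrieve: Callable[[str], List[str]],
    revise: Callable[[str, str, List[str], List[str]], str],
) -> List[str]:
    """Hypothetical sketch of the RAT loop described in the text."""
    # Step 1: get an initial zero-shot CoT as a list of thought steps.
    draft_steps = draft_cot(task_prompt)

    revised_steps: List[str] = []
    for step in draft_steps:
        # Step 2: build the query from the task prompt, the already
        # revised past steps, and the current draft step.
        query = "\n".join([task_prompt, *revised_steps, step])
        docs = retrieve(query)
        # Revise only the current step, never the whole chain at once.
        revised_steps.append(revise(task_prompt, step, list(revised_steps), docs))
    return revised_steps


# Toy stand-ins (assumptions for illustration only, no LLM involved).
KNOWLEDGE = {"step A": "fact A", "step B": "fact B"}


def toy_draft(prompt: str) -> List[str]:
    return ["step A", "step B"]


def toy_retrieve(query: str) -> List[str]:
    # Return every stored fact whose key appears in the query text.
    return [fact for key, fact in KNOWLEDGE.items() if key in query]


def toy_revise(task: str, step: str, past: List[str], docs: List[str]) -> str:
    return f"{step} [grounded in: {', '.join(docs)}]"


if __name__ == "__main__":
    for line in rat("task", toy_draft, toy_retrieve, toy_revise):
        print(line)
```

Because the query for each step includes the previously revised steps, the second toy step retrieves both facts, which mirrors how RAT conditions retrieval on the current and past thoughts rather than on the full draft in one shot.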

In the experiments, RAT was applied to several LLMs of varied scales: GPT-3.5, GPT-4, and CodeLLaMA-7b. The results indicate that combining RAT with these LLMs yields strong advantages over vanilla CoT prompting and RAG approaches. In particular, performance was measured across the following selection of tasks: (1) code generation: HumanEval (+20.94%), HumanEval+ (+18.89%), MBPP (+14.83%), MBPP+ (+1.86%); (2) mathematical reasoning problems: GSM8K (+8.36%) and GSMHard (+31.37%); (3) Minecraft task planning (2.96 times on executability and +51.94% on plausibility); (4) creative writing (+19.19% on human score). Beyond the numbers, RAT reveals how LLMs can revise their reasoning process in a zero-shot fashion with the help of outside knowledge, much as humans do.