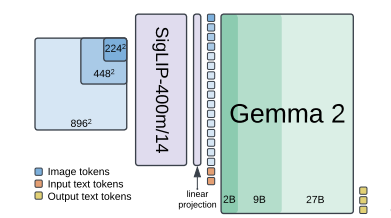

Google has released the PaliGemma 2 family of models, an upgrade of the open PaliGemma Vision-Language Model (VLM) built on the Gemma 2 family of language models. PaliGemma 2 combines the SigLIP-So400m vision encoder with the whole range of Gemma 2 models, from the 2B model all the way up to the 27B model.

PaliGemma 2 models are pretrained in multiple stages at three resolutions (224×224, 448×448, and 896×896 pixels) to equip them with broad knowledge for transfer via fine-tuning. Their vocabulary includes localization tokens (for detection) and segmentation tokens (to define a binary mask inside a bounding box). The resulting family of base models, covering different model sizes and resolutions, makes it possible to investigate factors that affect transfer performance (such as learning rate) and to analyze the interplay between the type of task, model size, and resolution.

PaliGemma 2 processes a 224×224, 448×448, or 896×896 pixel image with the SigLIP-So400m encoder, which uses a patch size of 14×14 pixels and therefore yields 256, 1024, or 4096 image tokens, respectively. After a linear projection, the image tokens are concatenated with the input text tokens, and Gemma 2 autoregressively completes this prefix with an answer.
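The token counts quoted above follow directly from the patch size: a square image is cut into non-overlapping 14×14 patches, and each patch becomes one image token. The short sketch below reproduces that arithmetic. The function names `num_image_tokens` and `prefix_length` are illustrative only and are not part of any PaliGemma API.

```python
def num_image_tokens(resolution: int, patch_size: int = 14) -> int:
    """Count image tokens for a square image split into square patches.

    Assumes one token per non-overlapping patch, as described in the text.
    """
    if resolution % patch_size != 0:
        raise ValueError("resolution must be a multiple of patch_size")
    patches_per_side = resolution // patch_size
    return patches_per_side * patches_per_side


def prefix_length(resolution: int, num_text_tokens: int) -> int:
    """Length of the prefix: image tokens followed by the input text tokens."""
    return num_image_tokens(resolution) + num_text_tokens


for res in (224, 448, 896):
    print(f"{res}x{res} px -> {num_image_tokens(res)} image tokens")
```

Each doubling of the resolution quadruples the number of image tokens, so the prefix that Gemma 2 must attend to grows quickly at 896×896.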

The evaluation extends the number and breadth of transfer tasks beyond those covered by PaliGemma. It adds OCR-related tasks such as table structure recognition, molecular structure recognition, and music score recognition, as well as long fine-grained captioning and radiography report generation. On these tasks, PaliGemma 2 obtains state-of-the-art results.

Paper: PaliGemma 2: A Family of Versatile VLMs for Transfer