Large language models (LLMs) often hallucinate and cannot provide attribution for their generations. Semi-parametric LMs, such as kNN-LM, address these limitations by refining the output of an LM for a given prompt using its nearest neighbor matches in a non-parametric data store. However, these models often suffer from slow inference and produce non-fluent text.

To address these challenges, researchers from Meta have introduced Nearest Neighbor Speculative Decoding (Nest), a novel semi-parametric language modeling approach that can incorporate real-world text spans of arbitrary length into the LM generations and provide attribution to their sources.

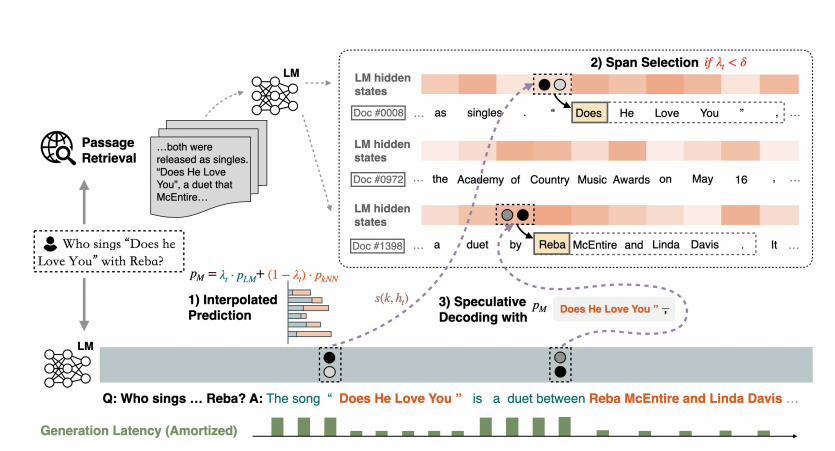

The Nest approach first locates the tokens in the corpus using the LM hidden states. The retrieval distribution p_kNN is then dynamically interpolated with the LM distribution p_LM based on the retriever's uncertainty λ_t. The token and its n-gram continuation are selected from the resulting mixture distribution p_M, and speculative decoding determines the final span length by removing undesired tokens. The spans incorporated into the final generation provide direct attribution and amortize the generation latency.
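As a rough illustration of the interpolation step, the sketch below mixes two next-token distributions into one. It assumes that the uncertainty weight multiplies the LM distribution, so that higher retriever uncertainty shifts probability mass toward the LM; this fits the description above but is not confirmed by this summary. The function name `interpolate`, the dictionary representation, and the toy probabilities are all hypothetical.

```python
def interpolate(p_lm, p_knn, lam):
    """Mix two next-token distributions (dicts mapping token -> probability).

    Assumed convention: lam is the retriever's uncertainty in [0, 1] and
    weights the LM distribution; (1 - lam) weights the retrieval distribution.
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be in [0, 1]")
    vocab = set(p_lm) | set(p_knn)
    return {
        tok: lam * p_lm.get(tok, 0.0) + (1.0 - lam) * p_knn.get(tok, 0.0)
        for tok in vocab
    }


# Toy example: the retriever is fairly confident (low uncertainty),
# so the mixture leans toward the retrieved token.
p_lm = {"Paris": 0.6, "London": 0.4}
p_knn = {"Paris": 1.0}
p_mix = interpolate(p_lm, p_knn, lam=0.25)
print(p_mix)  # Paris is roughly 0.9, London roughly 0.1
```

Setting `lam=1.0` recovers the plain LM distribution, and `lam=0.0` uses the retrieval distribution alone.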

At each inference step, Nest performs content generation with three sub-steps:

* Confidence-based interpolation: Adjusts output probabilities using a Relative Retrieval Confidence score, allowing dynamic adaptation to different tasks.
* Dynamic span selection: Extends token selection to include a span of text when confidence in retrieval exceeds a threshold.
* Relaxed speculative decoding: Evaluates selected spans based on mixture probability, accepting only highly probable prefixes.
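The second and third sub-steps can be sketched in simplified form as follows. This is not the paper's exact acceptance rule: it uses a plain deterministic probability cutoff as a stand-in, and it assumes the first token is always kept because it was already chosen from the mixture distribution. The function names, the thresholds, and the example probabilities are hypothetical.

```python
def select_candidate(token, continuation, retrieval_confidence, threshold):
    """Dynamic span selection (simplified): propose the token plus its
    n-gram continuation only when retrieval confidence exceeds the threshold;
    otherwise propose the single token."""
    if retrieval_confidence > threshold:
        return [token] + list(continuation)
    return [token]


def accept_prefix(span, mixture_probs, min_prob):
    """Relaxed verification (simplified): keep the longest prefix of the span
    whose continuation tokens all have mixture probability >= min_prob.

    Assumption of this sketch: the first token is always kept.
    """
    if len(span) != len(mixture_probs):
        raise ValueError("span and mixture_probs must have the same length")
    if not span:
        return []
    accepted = [span[0]]
    for tok, prob in zip(span[1:], mixture_probs[1:]):
        if prob < min_prob:
            break  # reject this token and everything after it
        accepted.append(tok)
    return accepted


# Toy example: a confident retrieval proposes a four-token span,
# and the improbable last token is cut off during verification.
candidate = select_candidate(
    "the", ["Eiffel", "Tower", "banana"], retrieval_confidence=0.9, threshold=0.5
)
probs = [0.8, 0.7, 0.6, 0.01]
print(accept_prefix(candidate, probs, min_prob=0.3))  # ['the', 'Eiffel', 'Tower']
```

Accepting several tokens per step is what amortizes latency: each accepted span replaces multiple single-token decoding steps.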

Nest significantly enhances the generation quality and attribution rate of the base LM across a variety of knowledge-intensive tasks, surpassing the conventional kNN-LM method and performing competitively with in-context retrieval augmentation. In addition, Nest substantially improves the generation speed, achieving a 1.8× speedup in inference time when applied to Llama-2-Chat 70B.

Paper: https://arxiv.org/pdf/2405.17976