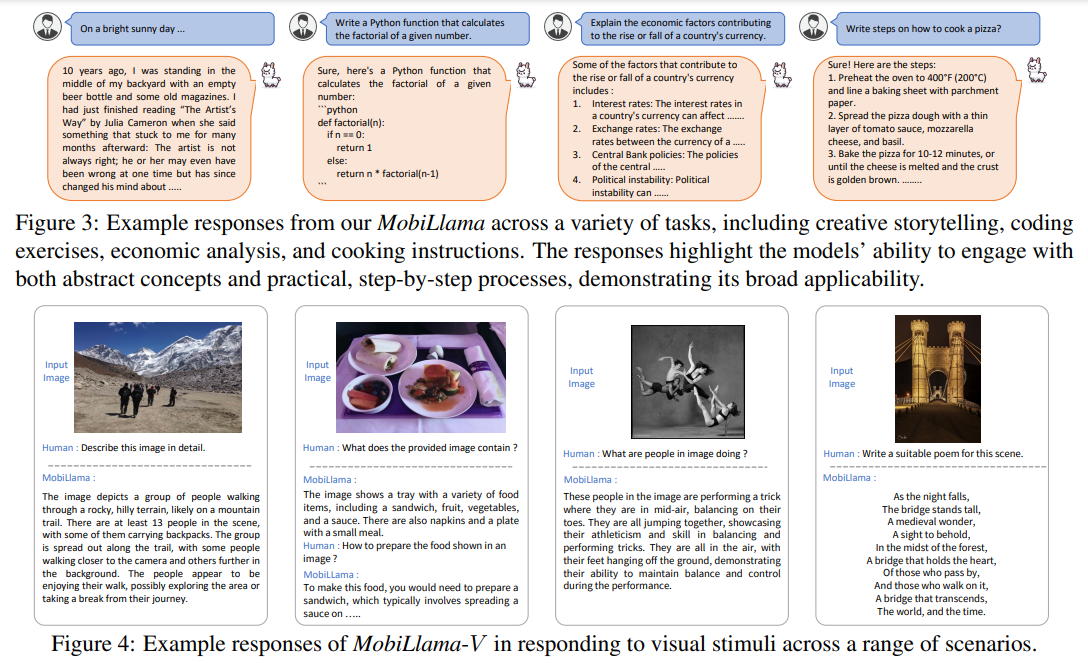

MobiLlama is another Small Language Model (SLM) for resource-constrained devices. It is an SLM design that starts from a larger model and applies a careful parameter-sharing scheme to reduce both pre-training and deployment cost. MobiLlama was trained on the Amber data sources.

MobiLlama aims to build accurate SLMs by reducing the redundancy in transformer blocks. In the conventional SLM design, each transformer block gets its own dedicated feed-forward network (FFN). MobiLlama instead uses a single FFN shared by all transformer blocks in the SLM.
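To make the effect of FFN sharing concrete, here is a minimal Python sketch that counts weights in a stack of transformer blocks. It assumes LLaMA-style blocks with a SwiGLU FFN (three projections) and full multi-head attention (four projections), and it ignores norms, biases and embeddings. The sizes (22 layers, `d_model=2048`, `d_ff=5632`) and all class and function names are hypothetical illustrations, not MobiLlama's published configuration or code. The sketch builds the same stack twice, once with a dedicated FFN per block and once with every block referencing one FFN object, and counts each distinct module once.

```python
from dataclasses import dataclass


@dataclass
class FFN:
    """Assumed SwiGLU-style FFN: gate, up and down projections, no biases."""
    d_model: int
    d_ff: int

    def num_params(self) -> int:
        return 3 * self.d_model * self.d_ff


@dataclass
class Attention:
    """Assumed full multi-head attention: Q, K, V and output projections, no biases."""
    d_model: int

    def num_params(self) -> int:
        return 4 * self.d_model * self.d_model


@dataclass
class Block:
    attn: Attention
    ffn: FFN


def build_blocks(n_layers: int, d_model: int, d_ff: int, shared_ffn: bool) -> list[Block]:
    """Hypothetical builder: each block owns its attention; the FFN is optionally shared."""
    shared = FFN(d_model, d_ff) if shared_ffn else None
    blocks = []
    for _ in range(n_layers):
        # With sharing, every block holds a reference to the same FFN object.
        ffn = shared if shared is not None else FFN(d_model, d_ff)
        blocks.append(Block(Attention(d_model), ffn))
    return blocks


def count_unique_params(blocks: list[Block]) -> int:
    """Count each distinct module once (by object identity), like shared weights."""
    seen = {}
    for block in blocks:
        for module in (block.attn, block.ffn):
            seen[id(module)] = module
    return sum(module.num_params() for module in seen.values())


if __name__ == "__main__":
    # Hypothetical sizes, loosely modeled on a ~1B LLaMA-style config.
    dedicated = count_unique_params(build_blocks(22, 2048, 5632, shared_ffn=False))
    shared = count_unique_params(build_blocks(22, 2048, 5632, shared_ffn=True))
    print(f"dedicated FFNs: {dedicated:,}")  # 1,130,364,928
    print(f"shared FFN:     {shared:,}")     # 403,701,760
```

Attention stays per-block, so the saving is exactly (n_layers - 1) copies of the FFN. With these assumed sizes the FFN holds about two thirds of each block's weights, which is why sharing it shrinks the stack so sharply.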

The MobiLlama 0.5B and 0.8B models outperform OLMo-1.17B and TinyLlama-1.1B in terms of pre-training tokens, pre-training time and memory, model parameters, overall accuracy across nine benchmarks, and on-device efficiency. MobiLlama achieves comparable accuracy while requiring significantly less pre-training data (1.2T vs. 3T tokens) and less pre-training time and GPU memory, and it is efficient to deploy on resource-constrained devices.

Paper: https://lnkd.in/gR2RzMiF

Code: https://lnkd.in/gg_-ArZt

Model: https://lnkd.in/gXegFw-8