Multi-modality inputs powered by Large Language Models (LLMs) are becoming an essential part of Vision Language Models (VLMs) such as LLaVA and Otter. Despite these advances, a significant gap remains between academic efforts and well-established models like GPT-4 and Gemini, which are trained with huge amounts of data and resources.

So how can VLMs be pushed closer to these well-developed models at an acceptable cost in an academic setting?

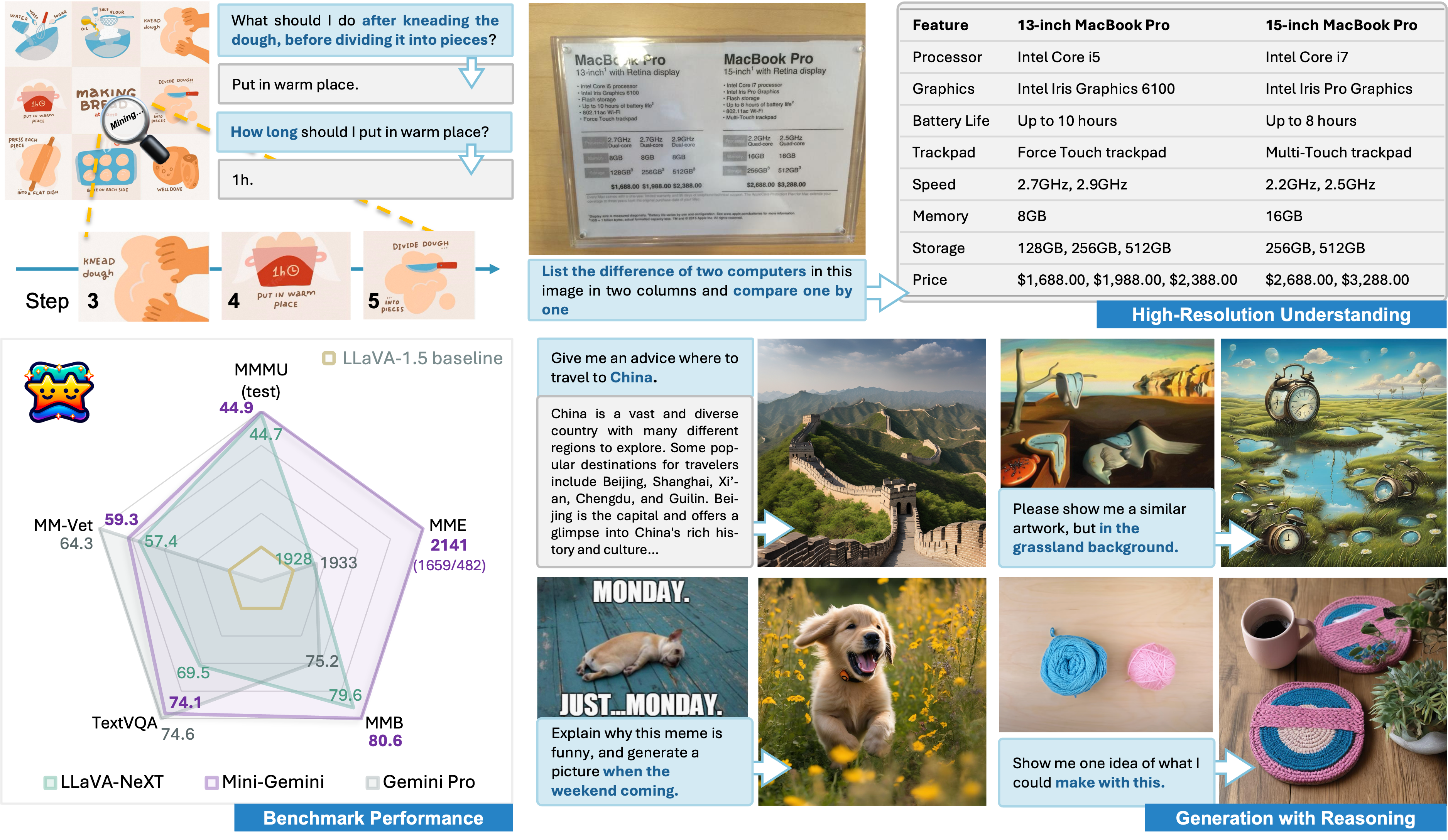

Introducing Mini-Gemini, a simple and effective framework for enhancing multi-modality Vision Language Models (VLMs). Mini-Gemini employs an any-to-any paradigm that handles both image and text as input and output. In particular, it introduces an efficient visual token enhancement pipeline for input images, built on a dual-encoder system. The twin encoders, one for high-resolution images and the other for low-resolution visual embeddings, mirror the cooperative functionality of the Gemini constellation. During inference, the two are combined through an attention mechanism: the low-resolution encoder generates visual queries, and the high-resolution encoder provides candidate keys and values for reference.
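To make the query/key/value roles concrete, here is a minimal sketch of cross-attention between the two token streams in plain Python. It is an illustration under stated assumptions, not Mini-Gemini's actual implementation: the function names (`softmax`, `cross_attend`), the toy vectors, the residual addition, and the choice to let every query attend over all high-resolution candidates are hypothetical, and any learned projections are omitted.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries, keys, values):
    """Let each low-resolution query attend over high-resolution candidates.

    queries: list of N vectors (low-resolution visual tokens)
    keys, values: lists of M vectors (high-resolution candidates)
    Returns N vectors: each query plus its attention-weighted mix of values.
    """
    dim = len(queries[0])
    scale = 1.0 / math.sqrt(dim)
    enhanced = []
    for q in queries:
        # Scaled dot-product similarity between this query and every key.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the high-resolution values.
        mixed = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
        # Residual addition is an assumption made for this sketch.
        enhanced.append([qi + mi for qi, mi in zip(q, mixed)])
    return enhanced


# Toy data: 2 low-resolution tokens, 4 high-resolution candidates, dimension 2.
low_res_tokens = [[1.0, 0.0], [0.0, 1.0]]
high_res_feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
enhanced_tokens = cross_attend(low_res_tokens, high_res_feats, high_res_feats)
```

In this sketch the output has exactly as many tokens as the low-resolution input, so the high-resolution detail enriches each token without lengthening the sequence.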

To improve data quality, the framework uses data drawn from public resources, including high-quality responses, task-oriented instructions, and generation-related data. The increased amount and quality of data improve overall performance and extend the capability of the model.

In general, Mini-Gemini further mines the potential of VLMs and empowers current frameworks with image understanding, reasoning, and generation simultaneously. It supports a series of dense and MoE Large Language Models (LLMs) from 2B to 34B. It achieves leading performance on several zero-shot benchmarks and even surpasses well-developed private models. For instance, Mini-Gemini is on par with Qwen-VL-Plus on the MathVista and MMMU benchmarks and even surpasses Gemini Pro and GPT-4V on the widely adopted MMB benchmark.