Mamba-Chat is the first chat language model based on a state-space model architecture, not a transformer.
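A transformer attends over every earlier token. A state-space model instead carries a fixed-size hidden state through the sequence, which is why it runs in linear time. The toy sketch below shows the idea for a single channel. It uses a diagonal state matrix `A`, and, as in Mamba's "selective" variant, the step size and the `B`/`C` projections depend on the current input. All parameter names (`w_B`, `w_C`, `w_delta`, `b_delta`) and the way `B_t`/`C_t` are derived from `x_t` are hypothetical simplifications chosen for illustration. This is not the Mamba implementation.

```python
import math


def softplus(z: float) -> float:
    # Numerically stable softplus: log(1 + exp(z)).
    return math.log1p(math.exp(-abs(z))) + max(z, 0.0)


def selective_scan(xs, A, w_B, w_C, w_delta, b_delta):
    """Toy single-channel selective SSM scan (hypothetical parameters).

    xs:        list of scalar inputs x_1..x_L
    A:         list of N negative floats (diagonal of the state matrix)
    w_B, w_C:  lists of N floats; B_t and C_t are made input-dependent
               by scaling them with x_t (the "selective" part)
    w_delta, b_delta: scalars that produce the step size Delta_t
    Runs in O(L * N): one pass over the sequence, constant state size.
    """
    n = len(A)
    h = [0.0] * n  # hidden state, same size for every sequence length
    ys = []
    for x in xs:
        delta = softplus(w_delta * x + b_delta)  # input-dependent step size
        B_t = [w * x for w in w_B]               # input-dependent input projection
        C_t = [w * x for w in w_C]               # input-dependent output projection
        for i in range(n):
            A_bar = math.exp(delta * A[i])  # zero-order-hold discretization of A
            B_bar = delta * B_t[i]          # simplified (Euler) discretization of B
            h[i] = A_bar * h[i] + B_bar * x
        ys.append(sum(c * hi for c, hi in zip(C_t, h)))
    return ys
```

Each output depends only on the current input and the carried state. Earlier outputs never change when later inputs change, so generation needs no growing cache of past tokens.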

The model is based on Albert Gu and Tri Dao's paper *Mamba: Linear-Time Sequence Modeling with Selective State Spaces* and on their model implementation. This repository provides training and fine-tuning code for the model, built on a modified version of the Hugging Face `Trainer` class. Mamba-Chat is based on Mamba-2.8B and was fine-tuned on 16,000 samples of the `HuggingFaceH4/ultrachat_200k` dataset.
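This text does not say what the `Trainer` modifications are. One plausible reason to customize it is that the Mamba language-model head returns logits but no loss, so the trainer's loss computation would have to score next-token predictions itself. That is an assumption on our part. The dependency-free sketch below shows that standard causal language-modeling loss: the logits at position `t` are scored against token `t + 1`, and the per-position cross-entropy is averaged. The function name `causal_lm_loss` is hypothetical.

```python
import math


def causal_lm_loss(logits, input_ids):
    """Mean next-token cross-entropy for one sequence (hypothetical helper).

    logits:    L x V list of lists, unnormalized scores at each position
    input_ids: list of L token ids
    """
    shift_logits = logits[:-1]  # predictions made at positions 0..L-2
    labels = input_ids[1:]      # targets are the following tokens 1..L-1
    total = 0.0
    for row, label in zip(shift_logits, labels):
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_z = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_z - row[label]  # -log softmax(row)[label]
    return total / len(labels)
```

With uniform logits over a vocabulary of size `V`, every prediction costs `log V`, so the mean loss is `log V`. In a PyTorch trainer the same computation would typically be a cross-entropy over the shifted logits and labels.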

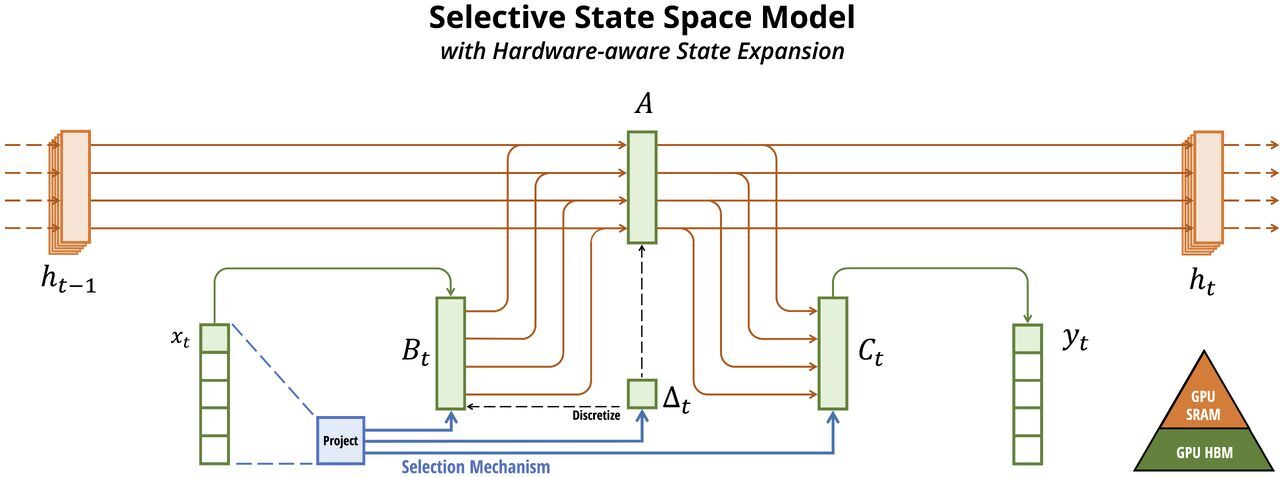

Mamba: Linear-Time Sequence Modeling with Selective State Spaces