Retrieval-augmented generation (RAG) has been pivotal in extending LLM use cases in knowledge management systems. However, existing RAG approaches are typically similarity-based: they retrieve documents from an external corpus based on their similarity to the query. Simply aggregating the top-k documents without considering the relationships between them makes it difficult to capture their commonalities and distinguishing characteristics. The resulting long context can even confuse the LLM, causing information loss and potentially degrading performance.

Hence, similarity is not always a “panacea”: relying entirely on similarity can sometimes degrade the performance of retrieval-augmented generation.
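For contrast, the similarity-only baseline described above fits in a few lines. This is a minimal illustration, assuming documents and the query are already embedded as dense vectors (the embedding step is omitted) and using cosine similarity. `top_k_by_similarity` and `naive_context` are hypothetical helper names, not part of any RAG library.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two dense vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_by_similarity(
    query_vec: Sequence[float], doc_vecs: Sequence[Sequence[float]], k: int
) -> List[Tuple[int, float]]:
    """Hypothetical helper: (doc index, score) pairs for the k most similar documents."""
    scored = [(i, cosine(query_vec, d)) for i, d in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def naive_context(docs: Sequence[str], ranked: Sequence[Tuple[int, float]]) -> str:
    """Hypothetical helper: concatenate the top-k documents as-is.

    Relationships between documents are ignored, so redundant passages are
    all kept and the prompt length grows with k.
    """
    return "\n\n".join(docs[i] for i, _ in ranked)


ranked = top_k_by_similarity([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]], k=2)
print([i for i, _ in ranked])  # [0, 2]
```

Nothing in this ranking asks whether a document actually helps answer the question; it only measures closeness in embedding space.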

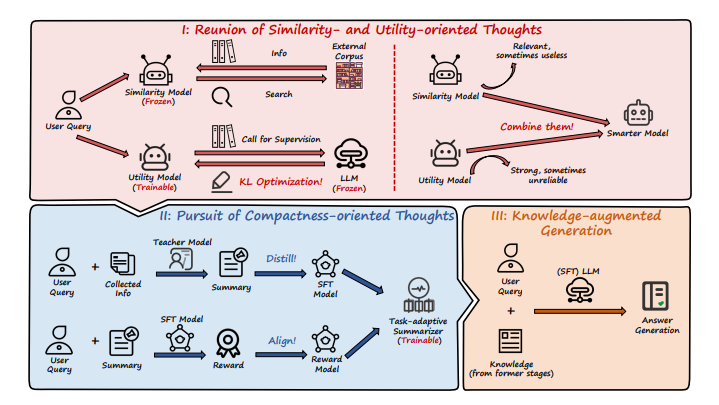

To address this, researchers have proposed METRAG, a Multi-layEred Thoughts enhanced Retrieval-Augmented Generation framework. METRAG endows retrieval-augmented generation with multi-layered thoughts. First, it uses supervision from an LLM to form utility-oriented thoughts, combining the similarity and utility of documents to boost performance. Next, it pursues compactness-oriented thoughts through a task-adaptive summarizer. Finally, it incorporates the derived multi-layered thoughts into answer generation.
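One simple way to picture “combining the similarity and utility of documents” is a weighted mix of the two scores per document. The sketch below is a hypothetical illustration: the linear rule, the weight `alpha`, and the hand-written scores are assumptions, not METRAG's actual formulation. In METRAG the utility signal comes from a model trained with LLM supervision; here it is just a list of numbers.

```python
from typing import List, Sequence, Tuple


def combined_rank(
    sim_scores: Sequence[float],
    util_scores: Sequence[float],
    alpha: float = 0.5,
    k: int = 3,
) -> List[Tuple[int, float]]:
    """Rank documents by alpha * similarity + (1 - alpha) * utility.

    Hypothetical mixing rule for illustration only. Both score lists are
    assumed to already be on a comparable scale (e.g. [0, 1]).
    """
    if len(sim_scores) != len(util_scores):
        raise ValueError("score lists must have the same length")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    combined = [
        (i, alpha * s + (1.0 - alpha) * u)
        for i, (s, u) in enumerate(zip(sim_scores, util_scores))
    ]
    # Highest combined score first; the sort is stable, so ties keep index order.
    combined.sort(key=lambda pair: pair[1], reverse=True)
    return combined[:k]


# Toy scores: doc 0 is the most similar to the query but the least useful.
sim = [0.9, 0.8, 0.3]
util = [0.1, 0.7, 0.9]
print([i for i, _ in combined_rank(sim, util, alpha=1.0)])  # [0, 1, 2] (similarity only)
print([i for i, _ in combined_rank(sim, util, alpha=0.5)])  # [1, 2, 0]
```

With `alpha=1.0` the ranking collapses to pure similarity; lowering it lets a useful but less similar document overtake a merely similar one.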

In short, METRAG combines similarity- and utility-oriented approaches. It uses a similarity model together with a utility model to select documents, then condenses them with a summarizer whose summarization skill is distilled from a powerful teacher model (such as GPT-4). Finally, multiple generated summaries are evaluated with a reward model to better align the summarizer with the downstream task. Extensive experiments on knowledge-intensive tasks demonstrate the superiority of the proposed METRAG.
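The reward-model step can be illustrated as best-of-n selection over candidate summaries. This is a minimal sketch under stated assumptions: `toy_reward` is a hypothetical keyword-coverage-minus-length heuristic standing in for a learned reward model, the candidates are hard-coded instead of being generated by the distilled summarizer, and `preference_pair` only shows the kind of (chosen, rejected) data an alignment step could use. It does not reproduce the paper's training procedure.

```python
from typing import Callable, List, Sequence, Tuple


def toy_reward(summary: str, keywords: Sequence[str], length_penalty: float = 0.01) -> float:
    """Hypothetical stand-in for a reward model: share of answer keywords kept,
    minus a small per-word cost that favors compact summaries."""
    words = summary.lower().split()
    coverage = sum(1 for kw in keywords if kw in words) / len(keywords)
    return coverage - length_penalty * len(words)


def select_best_summary(
    candidates: Sequence[str], reward_fn: Callable[[str], float]
) -> Tuple[str, List[float]]:
    """Score every candidate summary; return the highest-reward one and all scores."""
    if not candidates:
        raise ValueError("need at least one candidate summary")
    scores = [reward_fn(c) for c in candidates]
    best = max(range(len(candidates)), key=lambda i: scores[i])
    return candidates[best], scores


def preference_pair(
    candidates: Sequence[str], reward_fn: Callable[[str], float]
) -> Tuple[str, str]:
    """Hypothetical helper: (chosen, rejected) from the highest- and lowest-reward
    candidates, the kind of pair a preference-based alignment step could train on."""
    if len(candidates) < 2:
        raise ValueError("need at least two candidates to form a pair")
    scores = [reward_fn(c) for c in candidates]
    best = max(range(len(candidates)), key=lambda i: scores[i])
    worst = min(range(len(candidates)), key=lambda i: scores[i])
    return candidates[best], candidates[worst]


# Hard-coded candidates standing in for summarizer outputs.
candidates = [
    "paris is the capital of france",
    "the capital city is paris which is a large city in france with many museums",
    "berlin",
]
reward = lambda s: toy_reward(s, keywords=["paris", "france"])
best, scores = select_best_summary(candidates, reward)
print(best)  # paris is the capital of france
```

The length penalty is what makes the short, complete summary beat the longer one that covers the same keywords, mirroring the compactness goal described above.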

Paper: https://arxiv.org/pdf/2405.19893