Large Language Models (LLMs) have demonstrated remarkable capabilities across a variety of software engineering and coding tasks. However, their application in the domain of code and compiler optimization remains underexplored.

To address this gap, Meta has introduced a Large Language Model Compiler (LLM Compiler), a suite of robust, openly available, pre-trained models specifically designed for code optimization tasks. LLM Compiler enhances the understanding of compiler intermediate representations (IRs), assembly language, and optimization techniques.

LLM Compiler models are specialized from Code Llama by training on 546 billion tokens of compiler-centric data in two stages. In the first stage, the models are trained predominantly on unlabelled compiler IRs and assembly code. In the second stage, the models are instruction fine-tuned to predict the output and effect of optimizations. LLM Compiler FTD models are then further fine-tuned on 164 billion tokens of downstream flag tuning and disassembly task datasets, for a total of 710 billion training tokens. During each of the four training stages (the two LLM Compiler stages plus flag tuning and disassembly), 15% of the data from the previous tasks is retained.

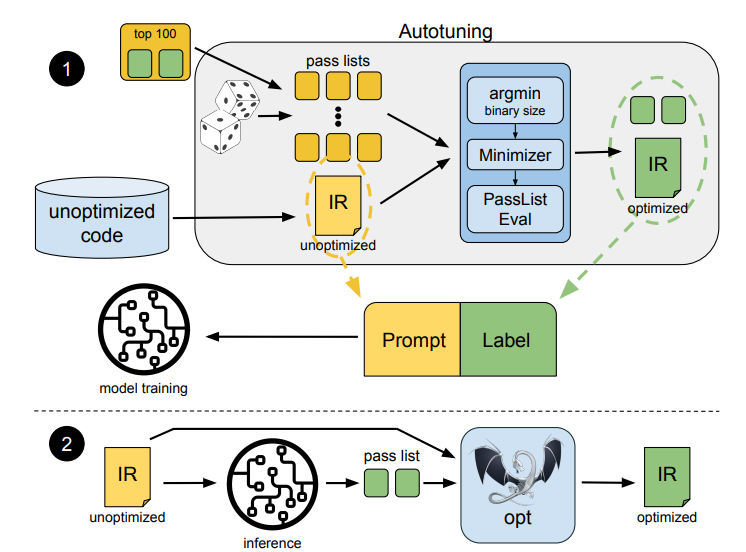

What stood out most to me was the model input (prompt) and output (label) used during training and inference. The prompt contains unoptimized code. The label contains an optimization pass list, the binary size, and the optimized code. To generate the label for a training prompt, the unoptimized code is compiled against multiple random pass lists. The pass list that achieves the minimum binary size is selected, minimized, and checked for correctness with PassListEval. The final pass list, together with its corresponding optimized IR, is used as the label during training. In a last step, the 100 most frequently selected pass lists are broadcast among all programs. At deployment, the model generates only the optimization pass list, which is then fed into the compiler, ensuring that the optimized code is correct.
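To make the label-generation steps concrete, here is a minimal Python sketch. It is not the paper's implementation. The names `minimize`, `make_label`, and `top_pass_lists` are hypothetical, `size_of` stands in for "compile with this pass list and measure the binary size", and `is_correct` stands in for a PassListEval-style check. The greedy one-pass-at-a-time minimization and the choice to return `None` when the check fails are my assumptions, and the sketch leaves out the optimized code that the real label also carries.

```python
from collections import Counter
from typing import Callable, Iterable, List, Optional, Sequence, Tuple

PassList = Tuple[str, ...]


def minimize(pass_list: PassList, size_of: Callable[[PassList], int]) -> PassList:
    """Greedily drop passes whose removal leaves the binary size unchanged.

    Assumption: the paper only says the pass list is "minimized"; this
    one-at-a-time removal is just one plausible way to do that.
    """
    target = size_of(pass_list)
    current = list(pass_list)
    i = 0
    while i < len(current):
        candidate = tuple(current[:i] + current[i + 1:])
        if size_of(candidate) == target:
            current = list(candidate)  # pass i was redundant, drop it
        else:
            i += 1  # pass i matters, keep it and move on
    return tuple(current)


def make_label(
    candidates: Sequence[PassList],
    size_of: Callable[[PassList], int],
    is_correct: Callable[[PassList], bool],
) -> Optional[Tuple[PassList, int]]:
    """Select the smallest-binary pass list, minimize it, then check it."""
    if not candidates:
        return None
    best = minimize(min(candidates, key=size_of), size_of)
    if not is_correct(best):
        return None  # assumption: discard labels that fail the check
    return best, size_of(best)


def top_pass_lists(selected: Iterable[PassList], k: int = 100) -> List[PassList]:
    """Return the k most frequently selected pass lists across programs."""
    return [pass_list for pass_list, _ in Counter(selected).most_common(k)]
```

In a real pipeline, `size_of` would invoke the compiler on the unoptimized IR, and the pass lists returned by `top_pass_lists` would then be tried on every program. Passing the two checks in as plain callables keeps the sketch runnable without a compiler installed.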

Paper : link