Multimodal Large Language Models (MLLMs) have experienced significant advancements recently, largely driven by the integration of pretrained vision encoders with Large Language Models (LLMs). This trend is exemplified by developments in Flamingo, BLIP-2, LLaVA, and MiniGPT-4. However, challenges persist in accurately recognizing and comprehending intricate details within high-resolution images. This is attributed to pretrained Vision Transformer (ViT) encoders used by MLLMs, where low resolution suffices for basic image-level semantic understanding but is inadequate for detailed, region-level analysis.

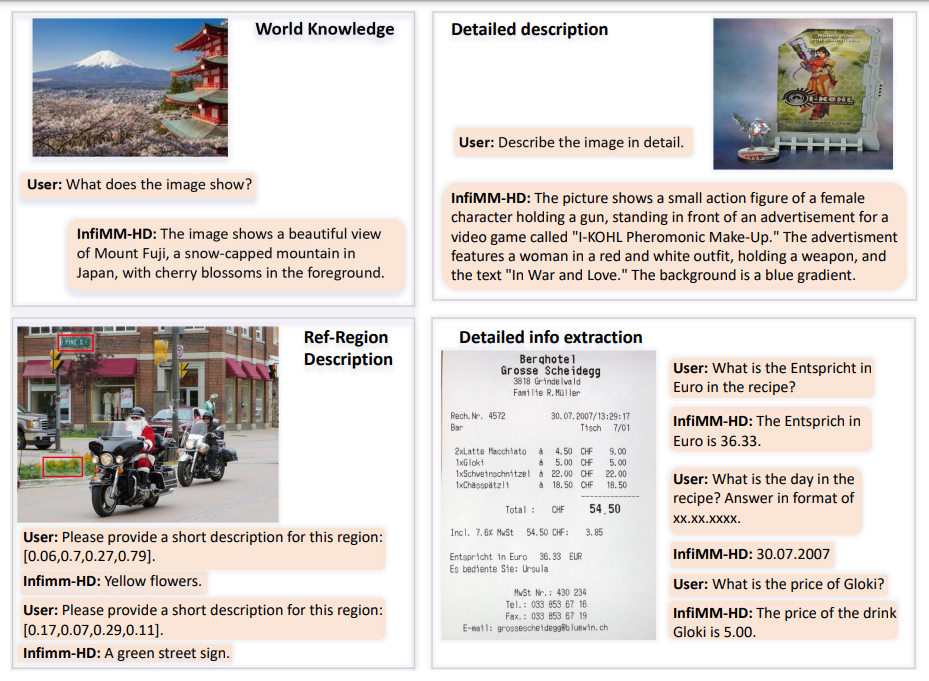

To address these challenges, researchers introduce InfiMM-HD, a novel architecture specifically designed for processing images of high resolutions with low computational overhead. InfiMM-HD consists of three components: a Vision Transformer Encoder, a Gated Cross Attention Module, and a Large Language Model. InfiMM-HD employs a cross attention mechanism to seamlessly integrate visual information with language models in a low-dimensional space. To address the formidable computational demands associated with high-resolution images, it partitioned the input high resolution image into smaller sub-images, each subjected to individual processing using a shared Vision Transformer (ViT) specifically tailored for relatively lower resolutions.

Further, researcher also introduced a four-stage training pipeline that effectively achieves a high-resolution Multimodal Large Language Model with reduced training cost, from initial low-resolution pretraining stage, to continue pretraining stage for knowledge injection and alignment, to dynamic resolution adaption stage for high resolution adoption and finally go through visual instruction fine-tuning stage.

During evaluation under Visual Question Answering (VQA) and text oriented VQA tasks InfiMM-HD outperformed its closest competitor by an average margin of 3.88%. InfiMM-HD was also evaluated on recently proposed MLLMs evaluation benchmarks, including MMMU, MMVet, InfiMM-Eval, MMB, MME, and POPE. Overall InfiMM-HD demonstrates commendable overall performance, highlighting its adaptability and competence across diverse disciplines.

Paper : https://arxiv.org/pdf/2403.01487.pdf