Training Large Language Models (LLMs) is challenging due to memory constraints from weight and optimizer size. Low-rank adaptation (LoRA) addresses this by adding trainable low-rank matrices to frozen pre-trained weights, reducing parameters and memory usage. However for fine-tuning, LoRA is not shown to reach a comparable performance as fullrank fine-tuning. For pre-training from scratch, it is shown to require a full-rank model training as a warmup, before optimizing in the low-rank subspace. There are two possible reasons: (1) the optimal weight matrices may not be low-rank, and (2) the reparameterization changes the gradient training dynamic.

To address this researchers have introduced Gradient Low-Rank Projection (GaLore), a training strategy that allows full-parameter learning but is more memory efficient than common low-rank adaptation methods such as LoRA. GaLore takes a novel approach compared to methods like LoRA and ReLoRA. Instead of doing low-rank projection in weight space (W = W0 + BA) and baking this into the weights every T steps, GaLore performs low-rank projection in gradient space: G = P @ G' @ Q^T. i.e, GaLore recomputes the projection matrices P and Q every T steps using the SVD of the full gradient. This allows the low-rank subspace to maximally adapt to the changing gradient distribution during training.

Since GaLore computes full gradients first and uses them to update the projection matrices every T steps. This means that it has only one matrix for the gradients (G) instead of two like in LoRA. Well, P and Q must be stored, but they aren’t optimized.

The memory savings in GaLore come from the reduction in optimizer state and the lack of newly introduced weights. In their implementation, they only use P for further savings. This is different from LoRA where the savings only come from the low-rank approx of the weights.

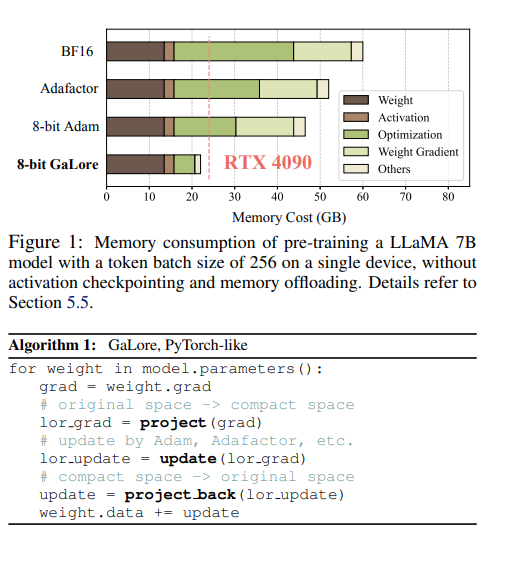

Our approach reduces memory usage by up to 65.5% in optimizer states while maintaining both efficiency and performance for pre-training on LLaMA 1B and 7B architectures with C4 dataset with up to 19.7B tokens, and on fine-tuning RoBERTa on GLUE tasks. Our 8-bit GaLore further reduces optimizer memory by up to 82.5% and total training memory by 63.3%, compared to a BF16 baseline. Notably, it demonstrates, for the first time, the feasibility of pre-training a 7B model on consumer GPUs with 24GB memory (e.g., NVIDIA RTX 4090) without model parallel, checkpointing, or offloading strategies.