Foundation models across the vision and language domains, such as GPT4, DALLE-3, SAM and LLaMA etc., have demonstrated significant advancements in addressing open-ended visual question-answering (VQA) .

However, the process of training individual foundation models has become remarkably costly. Furthermore, the full potential of these models remains untapped due to limitations in their fixed output modalities (i.e. text output for Q&A and visual output for image generation). Although techniques such as prompt engineering and adaptive tuning have shown promising results, these approaches struggle with integrating different foundation models off the shelf, expanding the output types and task objectives.

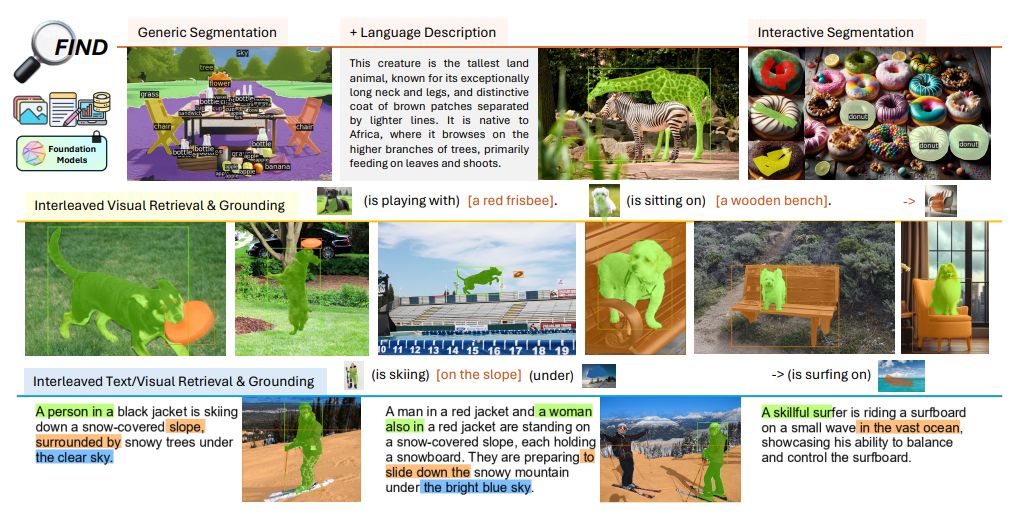

Paper proposes FIND - a generalized interface for aligning foundation models’ embeddings. The interface enables task-adaptive prototyping, which means we only need to change the configure file instead of the model architecture when adapting to the new tasks. Because all the vision-language tasks are trained in a unified way, this creates an interleaved shared embedding space where vision and language references are replaceable and addable. The proposed interface has the following favorable attributes: 1. Generalizable. It applies to various tasks spanning retrieval, segmentation, etc., under the same architecture and weights. 2. Prototypable. Different tasks are able to be implemented through prototyping attention masks and embedding types. 3. Extendable. The proposed interface is adaptive to new tasks, and new models. 4. Interleavable. With the benefit of multi-task multi-modal training, the proposed interface creates an interleaved shared embedding space.

Furthermore, FIND has achieved SoTA performance on interleaved image retrieval and segmentation and shows better or comparable performance on generic/interactive/grounded segmentation and image-text retrieval.