In recent years, text-to-image (T2I) generation models such as DreamBooth and BLIP-Diffusion have rapidly evolved, generating intricate and highly detailed images that are often hard to distinguish from real-world photographs. Yet they encounter limitations due to their intensive fine-tuning demands and substantial parameter requirements. While the low-rank adaptation (LoRA) module within DreamBooth reduces the number of trainable parameters, it introduces a pronounced sensitivity to hyperparameters, forcing a trade-off between parameter efficiency and the quality of personalized T2I image synthesis.

To address these constraints, researchers have introduced DiffuseKronA, a novel Kronecker product-based adaptation module that not only reduces the parameter count by up to 35% compared to LoRA-DreamBooth and by up to 99.947% compared to the original DreamBooth, but also enhances the quality of image synthesis.

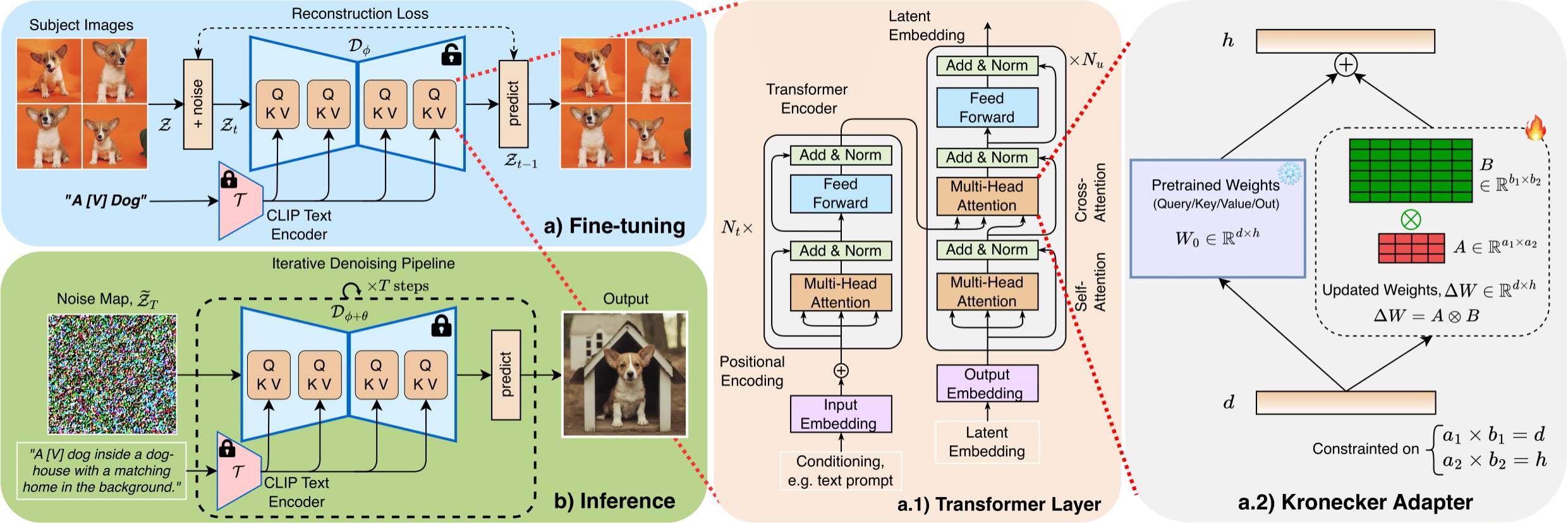

The main idea of DiffuseKronA is to leverage the Kronecker product to decompose the weight matrices of the attention layers in the UNet model. The Kronecker product is an operation on two matrices that multiplies each element of the first matrix by the entire second matrix, producing a larger block matrix that captures structured relationships and pairwise interactions between the elements of the two. In contrast to the low-rank decomposition in LoRA, the Kronecker adapter in DiffuseKronA offers a higher-rank approximation with fewer parameters and greater flexibility.
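To make the rank and parameter-count argument concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the 64x64 layer size, the LoRA rank `r = 4`, the 8x8 factor shapes, and all variable names are assumptions chosen purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical layer shape, chosen only for illustration.
d_out, d_in = 64, 64

# LoRA-style update: delta_W = B @ A, whose rank is at most r.
r = 4
lora_B = rng.standard_normal((d_out, r))
lora_A = rng.standard_normal((r, d_in))
delta_lora = lora_B @ lora_A

# Kronecker-style update: delta_W = A (x) B.
# The factor shapes multiply to the layer shape: (8*8, 8*8) = (64, 64).
kron_A = rng.standard_normal((8, 8))
kron_B = rng.standard_normal((8, 8))
delta_kron = np.kron(kron_A, kron_B)

# Trainable parameters in each update.
lora_params = lora_B.size + lora_A.size   # 64*4 + 4*64 = 512
kron_params = kron_A.size + kron_B.size   # 8*8 + 8*8 = 128

# rank(A (x) B) = rank(A) * rank(B), so two full-rank 8x8 factors
# give a full-rank 64x64 update, while the LoRA update is capped at r.
lora_rank = np.linalg.matrix_rank(delta_lora)
kron_rank = np.linalg.matrix_rank(delta_kron)

print(f"LoRA:      {lora_params} params, rank {lora_rank}")
print(f"Kronecker: {kron_params} params, rank {kron_rank}")
```

In this toy setting the Kronecker update uses a quarter of the LoRA parameters yet reaches full rank, because the rank of a Kronecker product is the product of the ranks of its factors. The specific savings reported for DiffuseKronA depend on the model and the factor shapes chosen, which this sketch does not attempt to reproduce.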

During experimentation, the performance of DiffuseKronA was compared with that of LoRA-DreamBooth under the following criteria:

Enhanced Stability: DiffuseKronA is more stable than LoRA-DreamBooth. Stability here refers to how much the generated images vary across different learning rates and Kronecker factors or ranks; LoRA-DreamBooth shows more variation, which makes it harder to fine-tune.

Text Alignment and Fidelity: On average, DiffuseKronA captures subject semantics and large contextual prompts better than LoRA-DreamBooth.

Interpretability: DiffuseKronA leverages the Kronecker product to capture structured relationships in attention-weight matrices. This more controllable decomposition makes DiffuseKronA more interpretable.

All in all, DiffuseKronA outperforms LoRA-DreamBooth in terms of visual quality, text alignment, fidelity, parameter efficiency, and stability.