Recent releases of advanced multimodal large language models (LLMs) such as GPT-4V and Gemini Pro Vision have led to breakthroughs in visual understanding and code generation. This opens up new possibilities in front-end development, where such models could translate visual designs directly into code and streamline the front-end engineering process.

So can we take a screenshot of a website design, give that image to an LLM, and get back a full code implementation that renders into the desired web page, in a fully end-to-end manner?

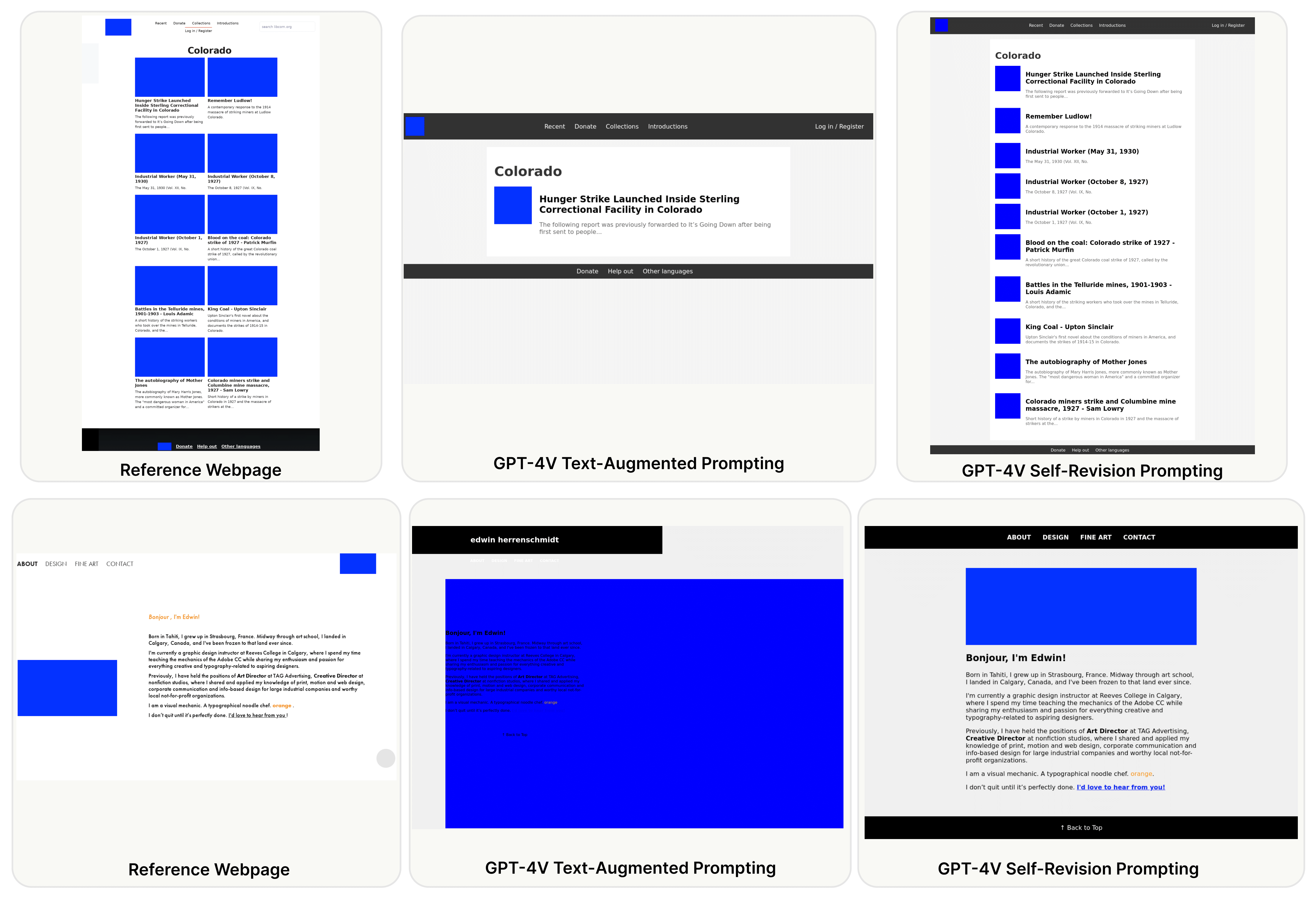

That’s the question researchers set out to answer with the Design2Code task, which provides the first systematic study of translating visual designs into code implementations. The researchers introduce the Design2Code benchmark, a curated set of 484 real-world webpages used as test cases, and develop a set of automatic evaluation metrics. Given only a screenshot as input, these metrics assess how well current multimodal LLMs generate code that renders into the reference webpage. The researchers also develop a suite of multimodal prompting methods (Direct Prompting, Text-Augmented Prompting, and Self-Revision Prompting) and show their effectiveness on GPT-4V and Gemini Pro Vision.
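To make the three prompting methods more concrete, here is a minimal Python sketch of how such prompts might be assembled. It is an illustration under stated assumptions, not the researchers' implementation: the `Prompt` class, the instruction wording, the helper names, and the `model` callable are all hypothetical. The sketch assumes that Text-Augmented Prompting supplies text extracted from the page alongside the screenshot, and that Self-Revision Prompting feeds the model's own first draft back to it for improvement.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Prompt:
    """Hypothetical container for one multimodal request."""
    instruction: str
    screenshot_path: str
    extra_text: Optional[str] = None  # optional text sent with the image


# Illustrative wording only; the exact prompts are not given in this article.
BASE_INSTRUCTION = (
    "Generate a single self-contained HTML file (with inline CSS) "
    "that renders to look like the attached screenshot."
)


def direct_prompt(screenshot_path: str) -> Prompt:
    # Direct Prompting: screenshot plus a plain instruction.
    return Prompt(BASE_INSTRUCTION, screenshot_path)


def text_augmented_prompt(screenshot_path: str, page_texts: List[str]) -> Prompt:
    # Text-Augmented Prompting (assumed form): also pass the page's text,
    # so the model can focus on layout rather than reading text off pixels.
    joined = "\n".join(page_texts)
    return Prompt(
        BASE_INSTRUCTION + " Use the text elements listed below.",
        screenshot_path,
        extra_text=joined,
    )


def self_revision_prompt(screenshot_path: str, previous_html: str) -> Prompt:
    # Self-Revision Prompting (assumed form): show the model its own draft
    # and ask it to bring the result closer to the screenshot.
    return Prompt(
        "Revise the HTML below so it matches the attached screenshot more closely.",
        screenshot_path,
        extra_text=previous_html,
    )


def run_self_revision(model: Callable[[Prompt], str], screenshot_path: str) -> str:
    # Two passes: a direct draft, then one revision of that draft.
    draft = model(direct_prompt(screenshot_path))
    return model(self_revision_prompt(screenshot_path, draft))
```

Here `model` stands in for whatever client sends the instruction, image, and extra text to a multimodal LLM and returns the generated HTML. Keeping it as a plain callable means the prompt-building logic can be exercised without any network access.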

Finally, the researchers finetune an open-source model, Design2Code-18B, using CogAgent-18B as the base model; it matches the performance of Gemini Pro Vision. Both human evaluation and automatic metrics show that GPT-4V is the clear winner on this task. Annotators think GPT-4V-generated webpages can replace the original reference webpages in 49% of cases in terms of visual appearance and content and, perhaps surprisingly, in 64% of cases they consider the GPT-4V-generated webpages better than the original references. Fine-grained breakdown metrics indicate that open-source models mostly lag in recalling visual elements from the input webpages and in generating correct layout designs, while aspects like text content and coloring can be drastically improved with proper finetuning.