If you do prompt engineering for LLMs, you have probably come across Chain of Thought (CoT) prompting, which substantially improves the reasoning abilities of LLMs. However, the relationship between the effectiveness of CoT and the length of the reasoning steps in prompts remains largely unknown.

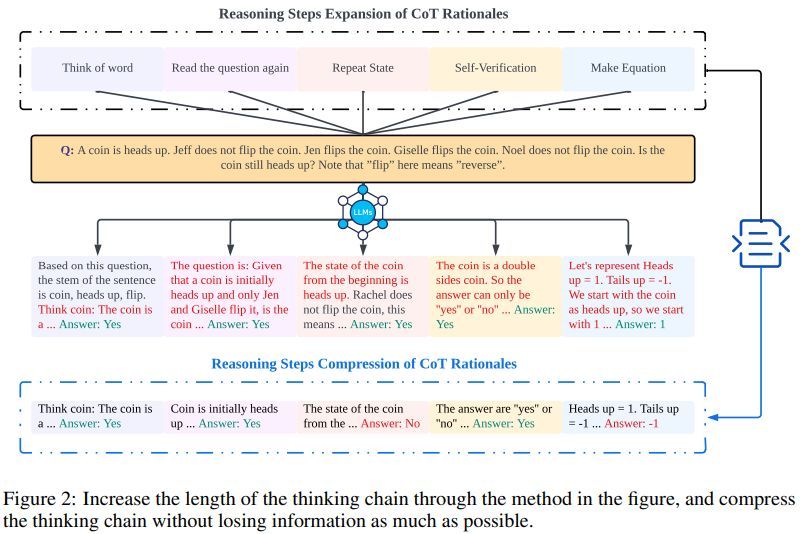

The length of the reasoning steps (chains) in a prompt affects how well large language models (LLMs) perform on tasks that require reasoning. Experiments show that increasing the number of reasoning steps in prompts, even without adding new information, significantly improves LLM performance across multiple datasets, while shortening the steps diminishes it. Compressing the steps undermines few-shot CoT performance to the point that it regresses to zero-shot levels.

Surprisingly, incorrect rationales can still yield good results if they maintain sufficient step length, suggesting that step length matters more than the factual accuracy of the individual steps. Altering the questions within prompts also has minimal impact, which again suggests that step length, rather than question details, is what primarily drives reasoning.

The benefits of longer steps scale with task complexity: simpler tasks require fewer steps, while complex tasks gain more from longer chains. Model size matters too: bigger LLMs need fewer steps to reach peak performance than smaller models, which points to a relationship between model size and optimal step count. Zero-shot prompting can also be improved by lengthening the initial prompt to encourage more reasoning (e.g. “Think step-by-step, think more steps”).
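To make the idea concrete, here is a minimal Python sketch of the two levers described above: appending a longer reasoning trigger to a zero-shot prompt, and lengthening a few-shot rationale without adding new information. The function names, the `Q:`/`A:` prompt layout, and the apple example are all assumptions made for illustration and are not taken from the experiments. Only the trigger phrase comes from the text. Counting one step per line is a rough proxy, not a definition of "step length".

```python
# Trigger phrase quoted from the text; everything else here is hypothetical.
LONGER_TRIGGER = "Think step-by-step, think more steps."

# Two rationales for the same toy question. The long one restates and checks
# the work but introduces no new facts.
SHORT_RATIONALE = "3 + 2 = 5."
LONG_RATIONALE = "\n".join([
    "Restate the question: Tom starts with 3 apples and buys 2 more.",
    "Set up the sum: total = 3 + 2.",
    "Compute: 3 + 2 = 5.",
    "Check: 5 - 2 = 3, which matches the starting count.",
])


def build_zero_shot_prompt(question, trigger=LONGER_TRIGGER):
    """Append a reasoning trigger to a question (assumed Q:/A: layout)."""
    return f"Q: {question.strip()}\nA: {trigger}"


def count_steps(rationale):
    """Count non-empty lines, treating each line as one reasoning step."""
    return sum(1 for line in rationale.splitlines() if line.strip())


def build_few_shot_prompt(demos, question):
    """Join (question, rationale, answer) demos, then add the new question."""
    blocks = [
        f"Q: {q}\nA: {rationale}\nThe answer is {answer}."
        for q, rationale, answer in demos
    ]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    demo_q = "Tom has 3 apples and buys 2 more. How many apples does he have?"
    new_q = "Ann has 4 pens and buys 3 more. How many pens does she have?"
    print("short steps:", count_steps(SHORT_RATIONALE))
    print("long steps:", count_steps(LONG_RATIONALE))
    print(build_zero_shot_prompt(new_q))
    print(build_few_shot_prompt([(demo_q, LONG_RATIONALE, "5")], new_q))
```

To compare the two settings on your own model, you would send the prompt built with `SHORT_RATIONALE` and the one built with `LONG_RATIONALE` to the same model and compare accuracy over a set of questions. The sketch deliberately stops short of calling any model API.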