The ability to comprehend and process long-context information is essential for large language models (LLMs) to serve a wide range of applications effectively. Fine-tuning on longer sequences is one way to improve an LLM's long-context capability, but because continual pretraining on long sequences is expensive, previously released long-context models are typically limited to the 7B/13B scale.

To address this, researchers have introduced Dual Chunk Attention (DCA), a new training-free framework for extrapolating the context window of LLMs. Inspired by efficient chunk-based attention patterns, DCA segments the self-attention computation for a long sequence into small chunks, each smaller than the pretraining window. DCA consists of three components: (1) intra-chunk attention, tailored for processing tokens within the same chunk; (2) inter-chunk attention, for processing tokens between distinct chunks; and (3) successive-chunk attention, for processing tokens in successive, distinct chunks. Treating these three cases separately helps the model capture both long-range and short-range dependencies in a sequence. The chunk-based attention calculation can also be integrated seamlessly with FlashAttention-2, a key element of long-context scaling in the open-source community.
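The three cases above can be sketched as a small function that builds the relative-position matrix a chunked attention layer would use. This is a minimal illustration, not the authors' implementation: the function name `dca_relative_positions`, its parameters, and the exact index formulas (resetting key positions inside each chunk, shifting the query by one chunk for the successive case, and pinning the query to the largest pretrained position for the inter-chunk case) are assumptions chosen to show the idea that no relative position ever exceeds the pretraining window.

```python
def dca_relative_positions(seq_len, chunk_size, pretrain_window):
    """Build a causal matrix rel[i][j] of relative positions for query i and key j.

    Simplified, assumed scheme for illustration only. Entries for masked
    (future) keys are None.
    """
    assert 0 < chunk_size < pretrain_window
    max_pos = pretrain_window - 1  # largest position index seen in pretraining
    rel = [[None] * seq_len for _ in range(seq_len)]

    for i in range(seq_len):
        q_chunk, q_off = divmod(i, chunk_size)
        for j in range(i + 1):  # causal: keys up to and including i
            k_chunk, k_off = divmod(j, chunk_size)
            if q_chunk == k_chunk:
                # Intra-chunk: ordinary relative distance inside one chunk.
                rel[i][j] = q_off - k_off
            elif q_chunk - k_chunk == 1:
                # Successive chunks: shift the query by one chunk so keys just
                # across the boundary keep their true distance, capped at max_pos.
                q_pos = min(q_off + chunk_size, max_pos)
                rel[i][j] = q_pos - k_off
            else:
                # Inter-chunk: pin the query to the largest pretrained position.
                rel[i][j] = max_pos - k_off
    return rel


# Usage: a 12-token sequence, chunks of 4, and a pretraining window of 6.
rel = dca_relative_positions(seq_len=12, chunk_size=4, pretrain_window=6)
visible = [d for row in rel for d in row if d is not None]
print(max(visible) < 6)  # every relative position fits in the pretraining window
```

Under these assumptions, neighbouring tokens on either side of a chunk boundary still see their true distance, while distant chunks are all mapped into the range of positions the model saw during pretraining.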

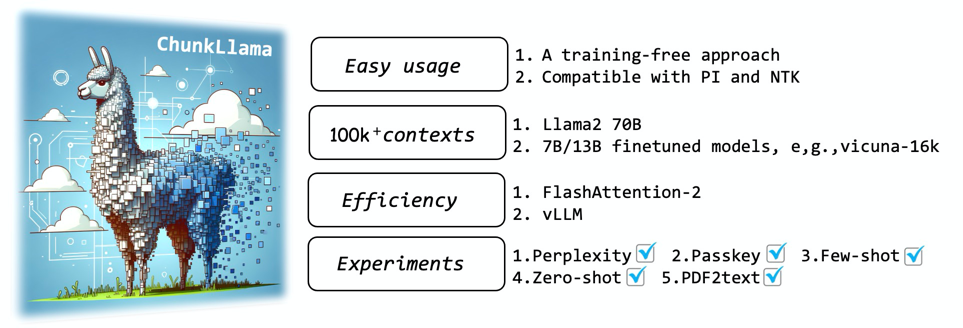

DCA provides a training-free and effective method for extending the context window of LLMs to more than 8 times their original pretraining length. It can be integrated seamlessly with (1) popular extrapolation methods such as Positional Interpolation (PI) and NTK-Aware RoPE; and (2) widely used libraries for memory-efficient inference such as FlashAttention and vLLM.
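For readers unfamiliar with the two extrapolation methods named above, the following sketch shows the scaling rule each one is commonly described by. It does not show how DCA combines with them; the helper names and the default base of 10000 are assumptions for illustration.

```python
def rope_inv_freq(head_dim, base=10000.0):
    """Inverse frequencies used by rotary position embeddings (RoPE)."""
    return [base ** (-2.0 * i / head_dim) for i in range(head_dim // 2)]


def pi_positions(seq_len, train_len):
    """Positional Interpolation: squeeze positions back into the trained range."""
    scale = min(1.0, train_len / seq_len)
    return [p * scale for p in range(seq_len)]


def ntk_aware_base(base, head_dim, scale):
    """NTK-aware scaling: enlarge the RoPE base instead of rescaling positions."""
    return base * scale ** (head_dim / (head_dim - 2))


# Usage: extend a model trained on 4096 tokens to 8x that length.
scale = 8.0
positions = pi_positions(seq_len=4096 * 8, train_len=4096)
new_freqs = rope_inv_freq(128, ntk_aware_base(10000.0, 128, scale))
old_freqs = rope_inv_freq(128)
print(positions[-1] < 4096)          # interpolated positions stay in range
print(old_freqs[-1] / new_freqs[-1])  # lowest frequency shrinks by about `scale`
```

PI leaves the frequencies alone and compresses every position uniformly, whereas the NTK-aware rule keeps the highest frequency unchanged and slows the lowest one by the scale factor.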

DCA achieves performance on practical long-context tasks that is comparable to, or even better than, that of fine-tuned models. Compared with proprietary models, the training-free ChunkLlama2 70B model attains 94% of the performance of gpt-3.5-16k, indicating that it is a viable open-source alternative.