The Mixture of Experts (MoE) framework has become a popular architecture for large language models due to its superior performance over dense models. However, training MoEs from scratch in a large-scale regime is prohibitively expensive. Existing methods mitigate this by pre-training multiple dense expert models independently and using them to initialize an MoE. Specifically, each dense model’s feed-forward network (FFN) initializes one of the MoE’s experts, while the remaining parameters are merged. This approach reuses dense model parameters only in the FFN layers, which limits the benefit of “upcycling” these models into MoEs.

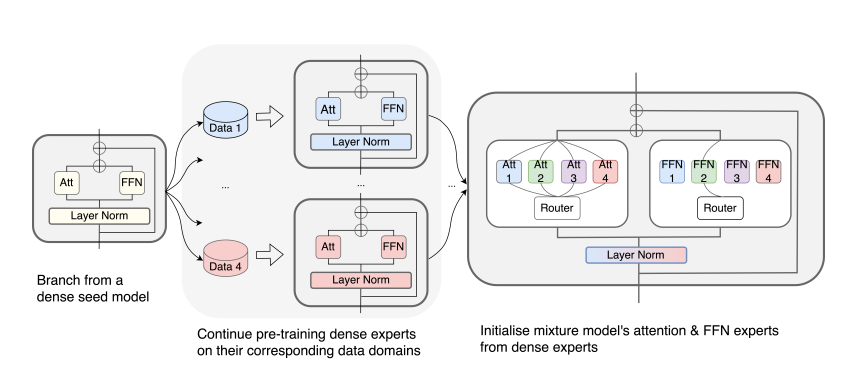

To address this shortcoming, researchers have proposed BAM (Branch-Attend-Mix), a simple yet effective method that makes full use of specialized dense models: it uses their FFNs to initialize the MoE layers and also uses their attention parameters to initialize a soft variant of Mixture of Attention (MoA) layers. BAM operates in three phases. 1) Branching: Begin with a pre-trained dense seed model and create N copies of it. 2) Continued Pre-training: Continue to pre-train each copy independently on its own data mixture. This process yields specialized dense expert models. 3) Mixture Model Training: Use these specialized dense expert models to initialize both the FFN and attention experts of the mixture model. The router layers are initialized randomly. All other parameters are derived by averaging the corresponding layers across the dense experts. To further improve efficiency, BAM adopts a parallel attention transformer architecture for MoEs, which allows the attention experts and FFN experts to be computed concurrently.
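
The initialization in phase 3 can be sketched as follows. This is a minimal illustration, not the paper's implementation: it assumes each dense expert is a flat dictionary of parameter vectors for a single layer, and the names `init_mixture`, `ffn.`, `attn.`, `ffn_router`, and `attn_router`, as well as the router size and the Gaussian initialization scale, are hypothetical choices made for the example.

```python
import random

def init_mixture(dense_experts, expert_prefixes=("ffn.", "attn."),
                 router_dim=4, seed=0):
    """Build mixture-model parameters from N specialized dense models.

    dense_experts: list of dicts mapping parameter name -> list of floats.
    Parameter names and the router shape are assumptions for illustration.
    """
    rng = random.Random(seed)
    n = len(dense_experts)
    mixture = {}
    for name in dense_experts[0]:
        if name.startswith(expert_prefixes):
            # FFN and attention parameters: keep one copy per dense model,
            # so each dense model becomes one expert in the mixture.
            for i, expert in enumerate(dense_experts):
                mixture[f"expert{i}.{name}"] = list(expert[name])
        else:
            # All other parameters: element-wise average across dense models.
            columns = zip(*(expert[name] for expert in dense_experts))
            mixture[name] = [sum(col) / n for col in columns]
    # Routers have no dense counterpart, so they are initialized randomly.
    for router in ("ffn_router", "attn_router"):
        mixture[router] = [rng.gauss(0.0, 0.02) for _ in range(router_dim * n)]
    return mixture

# Two toy "dense experts" with one FFN, one attention, and one norm parameter.
experts = [
    {"ffn.w": [1.0, 2.0], "attn.q": [0.5], "norm.g": [1.0, 3.0]},
    {"ffn.w": [3.0, 4.0], "attn.q": [1.5], "norm.g": [3.0, 5.0]},
]
mixture = init_mixture(experts)
```

In this toy example, `mixture["norm.g"]` holds the average of the two norm vectors, while `expert0.ffn.w` and `expert1.attn.q` are copied unchanged from their source models.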

Further, BAM uses two methods for upcycling attention parameters: 1) initializing separate attention experts from the dense models, including all attention parameters, for the best model performance; and 2) sharing key and value parameters across all experts for better inference efficiency.
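
The difference between the two variants comes down to which attention parameters exist once per expert and which exist once per layer. The sketch below only illustrates that bookkeeping; the parameter names (`attn.q`, `attn.k`, `attn.v`, `attn.out`) and both helper functions are hypothetical and not taken from the paper.

```python
# Hypothetical names for the attention parameters of one layer.
ATTENTION_PARAMS = ("attn.q", "attn.k", "attn.v", "attn.out")

def split_attention_params(share_kv):
    """Return (per_expert, shared) attention parameter names.

    share_kv=False: every attention parameter is expert-specific (variant 1).
    share_kv=True:  key and value parameters are shared by all experts (variant 2).
    """
    shared = {"attn.k", "attn.v"} if share_kv else set()
    per_expert = [p for p in ATTENTION_PARAMS if p not in shared]
    return per_expert, sorted(shared)

def kv_projection_sets(num_experts, share_kv):
    """Number of distinct key/value projection sets held in one layer."""
    return 1 if share_kv else num_experts

full = split_attention_params(share_kv=False)
shared_kv = split_attention_params(share_kv=True)
```

With four experts, the first variant holds four key/value projection sets per layer and the second holds one, which is where the inference saving comes from.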

Experiments on seed models ranging from 590 million to 2 billion parameters demonstrate that BAM surpasses baselines in both perplexity and downstream task performance under the same computational and data constraints.

Paper: https://arxiv.org/pdf/2408.08274