Long-context LLMs are among the most challenging models to work with, because memory plays a crucial role in processing extremely long contexts and in using information from the distant past. Various methods have emerged for extending the context length of transformers, including approaches based on segment-level recurrence, but none has fully solved the problem.

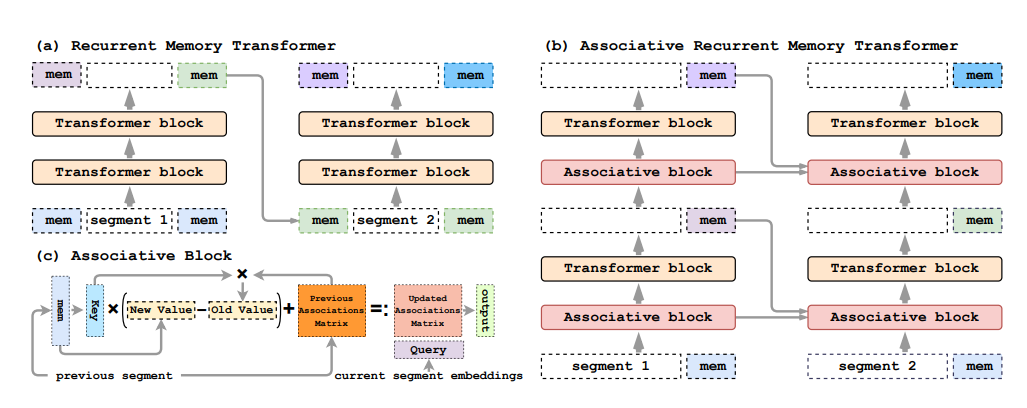

Researchers have now proposed a new model called the Associative Recurrent Memory Transformer (ARMT), a memory-augmented, segment-level recurrent Transformer built on the Recurrent Memory Transformer (RMT). Compared to RWKV and Mamba, which use association-based techniques, ARMT benefits from full local self-attention and, like RMT, has constant time and space complexity for processing each new segment.
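To make the idea of constant-size memory across segments more concrete, here is a minimal sketch in plain Python. It is not the ARMT update rule from the paper; it assumes a generic linear associative memory (key-value pairs stored as a sum of outer products) and replaces the transformer's local self-attention with precomputed key-value pairs. All names (`empty_memory`, `write`, `read`, `process_segments`) are hypothetical and chosen only for illustration.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Segment = List[Tuple[Vector, Vector]]  # (key, value) pairs found in one segment


def empty_memory(dim_key: int, dim_value: int) -> Matrix:
    """Fixed-size memory: one row per value dimension, one column per key dimension."""
    return [[0.0] * dim_key for _ in range(dim_value)]


def write(memory: Matrix, key: Vector, value: Vector) -> None:
    """Store a pair by adding the outer product value * key^T to the memory in place."""
    for i, v in enumerate(value):
        for j, k in enumerate(key):
            memory[i][j] += v * k


def read(memory: Matrix, query: Vector) -> Vector:
    """Retrieve the value associated with a query via a matrix-vector product."""
    return [sum(m * q for m, q in zip(row, query)) for row in memory]


def process_segments(segments: List[Segment], dim_key: int, dim_value: int) -> Matrix:
    """Process segments one at a time, carrying only the fixed-size memory forward."""
    memory = empty_memory(dim_key, dim_value)
    for segment in segments:
        # Assumption: a real model would run local self-attention over the
        # segment here; this sketch only writes the segment's pairs to memory.
        for key, value in segment:
            write(memory, key, value)
    return memory


# Two segments, each contributing one fact under an orthonormal key.
segments = [
    [([1.0, 0.0], [2.0, 3.0])],
    [([0.0, 1.0], [5.0, 7.0])],
]
memory = process_segments(segments, dim_key=2, dim_value=2)
print(read(memory, [1.0, 0.0]))  # [2.0, 3.0]
```

The memory stays a 2 x 2 matrix however many segments are processed, which is the sense in which the per-segment cost is constant. Retrieval is exact here only because the keys are orthonormal; with overlapping keys, stored values interfere with each other.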

In experiments, ARMT outperformed existing alternatives on associative retrieval tasks and set a new performance record on the recent BABILong multi-task long-context benchmark, answering single-fact questions over 50 million tokens with an accuracy of 79.9%.

Paper: https://arxiv.org/pdf/2407.04841

Code: https://github.com/RodkinIvan/associative-recurrent-memory-transformer